Experiment with PDF generation

Signed-off-by: David Young <davidy@funkypenguin.co.nz>

docs/.gitpod.yml

@@ -0,0 +1,6 @@

# image: squidfunk/mkdocs-material
tasks:
  - init: pip install -r requirements.txt
ports:
  - port: 8000
    onOpen: open-preview

docs/community/code-of-conduct.md

@@ -0,0 +1,141 @@

---
title: Community Code of Conduct
description: We as members, contributors, and leaders pledge to make participation in our community a harassment-free experience for everyone, regardless of age, body size, visible or invisible disability, ethnicity, sex characteristics, gender identity and expression, level of experience, education, socio-economic status, nationality, personal appearance, race, religion, or sexual identity and orientation.
---
# Code of Conduct

Inspired by the leadership of other [great open source projects](https://www.contributor-covenant.org/adopters/), we've adopted the [Contributor Covenant Code of Conduct](https://www.contributor-covenant.org/) (*below*).

Details on how the enforcement guidelines below are implemented can be found on the page for each of our community environments:

* [Discord](/community/discord/)
* Discourse (coming soon)
* GitHub (coming soon)

## Our Pledge

We as members, contributors, and leaders pledge to make participation in our
community a harassment-free experience for everyone, regardless of age, body
size, visible or invisible disability, ethnicity, sex characteristics, gender
identity and expression, level of experience, education, socio-economic status,
nationality, personal appearance, race, religion, or sexual identity
and orientation.

We pledge to act and interact in ways that contribute to an open, welcoming,
diverse, inclusive, and healthy community.

## Our Standards

Examples of behavior that contributes to a positive environment for our
community include:

* Demonstrating empathy and kindness toward other people
* Being respectful of differing opinions, viewpoints, and experiences
* Giving and gracefully accepting constructive feedback
* Accepting responsibility and apologizing to those affected by our mistakes,
  and learning from the experience
* Focusing on what is best not just for us as individuals, but for the
  overall community

Examples of unacceptable behavior include:

* The use of sexualized language or imagery, and sexual attention or
  advances of any kind
* Trolling, insulting or derogatory comments, and personal or political attacks
* Public or private harassment
* Publishing others' private information, such as a physical or email
  address, without their explicit permission
* Other conduct which could reasonably be considered inappropriate in a
  professional setting

## Enforcement Responsibilities

Community leaders are responsible for clarifying and enforcing our standards of
acceptable behavior and will take appropriate and fair corrective action in
response to any behavior that they deem inappropriate, threatening, offensive,
or harmful.

Community leaders have the right and responsibility to remove, edit, or reject
comments, commits, code, wiki edits, issues, and other contributions that are
not aligned to this Code of Conduct, and will communicate reasons for moderation
decisions when appropriate.

## Scope

This Code of Conduct applies within all community spaces, and also applies when
an individual is officially representing the community in public spaces.
Examples of representing our community include using an official e-mail address,
posting via an official social media account, or acting as an appointed
representative at an online or offline event.

## Enforcement

Instances of abusive, harassing, or otherwise unacceptable behavior may be
reported to the community leaders responsible for enforcement at
abuse@funkypenguin.co.nz.

All complaints will be reviewed and investigated promptly and fairly.

All community leaders are obligated to respect the privacy and security of the
reporter of any incident.

## Enforcement Guidelines

Community leaders will follow these Community Impact Guidelines in determining
the consequences for any action they deem in violation of this Code of Conduct:

### 1. Correction

**Community Impact**: Use of inappropriate language or other behavior deemed
unprofessional or unwelcome in the community.

**Consequence**: A private, written warning from community leaders, providing
clarity around the nature of the violation and an explanation of why the
behavior was inappropriate. A public apology may be requested.

### 2. Warning

**Community Impact**: A violation through a single incident or series
of actions.

**Consequence**: A warning with consequences for continued behavior. No
interaction with the people involved, including unsolicited interaction with
those enforcing the Code of Conduct, for a specified period of time. This
includes avoiding interactions in community spaces as well as external channels
like social media. Violating these terms may lead to a temporary or
permanent ban.

### 3. Temporary Ban

**Community Impact**: A serious violation of community standards, including
sustained inappropriate behavior.

**Consequence**: A temporary ban from any sort of interaction or public
communication with the community for a specified period of time. No public or
private interaction with the people involved, including unsolicited interaction
with those enforcing the Code of Conduct, is allowed during this period.
Violating these terms may lead to a permanent ban.

### 4. Permanent Ban

**Community Impact**: Demonstrating a pattern of violation of community
standards, including sustained inappropriate behavior, harassment of an
individual, or aggression toward or disparagement of classes of individuals.

**Consequence**: A permanent ban from any sort of public interaction within
the community.

## Attribution

This Code of Conduct is adapted from the [Contributor Covenant][homepage],
version 2.0, available at
<https://www.contributor-covenant.org/version/2/0/code_of_conduct.html>.

Community Impact Guidelines were inspired by [Mozilla's code of conduct
enforcement ladder](https://github.com/mozilla/diversity).

[homepage]: https://www.contributor-covenant.org

For answers to common questions about this code of conduct, see the FAQ at
<https://www.contributor-covenant.org/faq>. Translations are available at
<https://www.contributor-covenant.org/translations>.

70

docs/community/contribute.md

Normal file

@@ -0,0 +1,70 @@

|

||||

---

|

||||

title: How to contribute to Geek Cookbook

|

||||

description: Loving the geeky recipes, and looking for a way to give back / get involved. It's not all coding - here are some ideas re various ways you can be involved!

|

||||

---

|

||||

# Contribute

|

||||

|

||||

## Spread the word ❤️

|

||||

|

||||

Got nothing to contribute, but want to give back to the community? Here are some ideas:

|

||||

|

||||

1. Star :star: the [repo](https://github.com/geek-cookbook/geek-cookbook/)

|

||||

2. Tweet :bird: the [meat](https://ctt.ac/Vl6mc)!

|

||||

|

||||

## Contributing moneyz 💰

|

||||

|

||||

Sponsor [your chef](https://github.com/sponsors/funkypenguin) :heart:, or [join us](/#sponsored-projects) in supporting the open-source projects we enjoy!

|

||||

|

||||

## Contributing bugfixorz 🐛

|

||||

|

||||

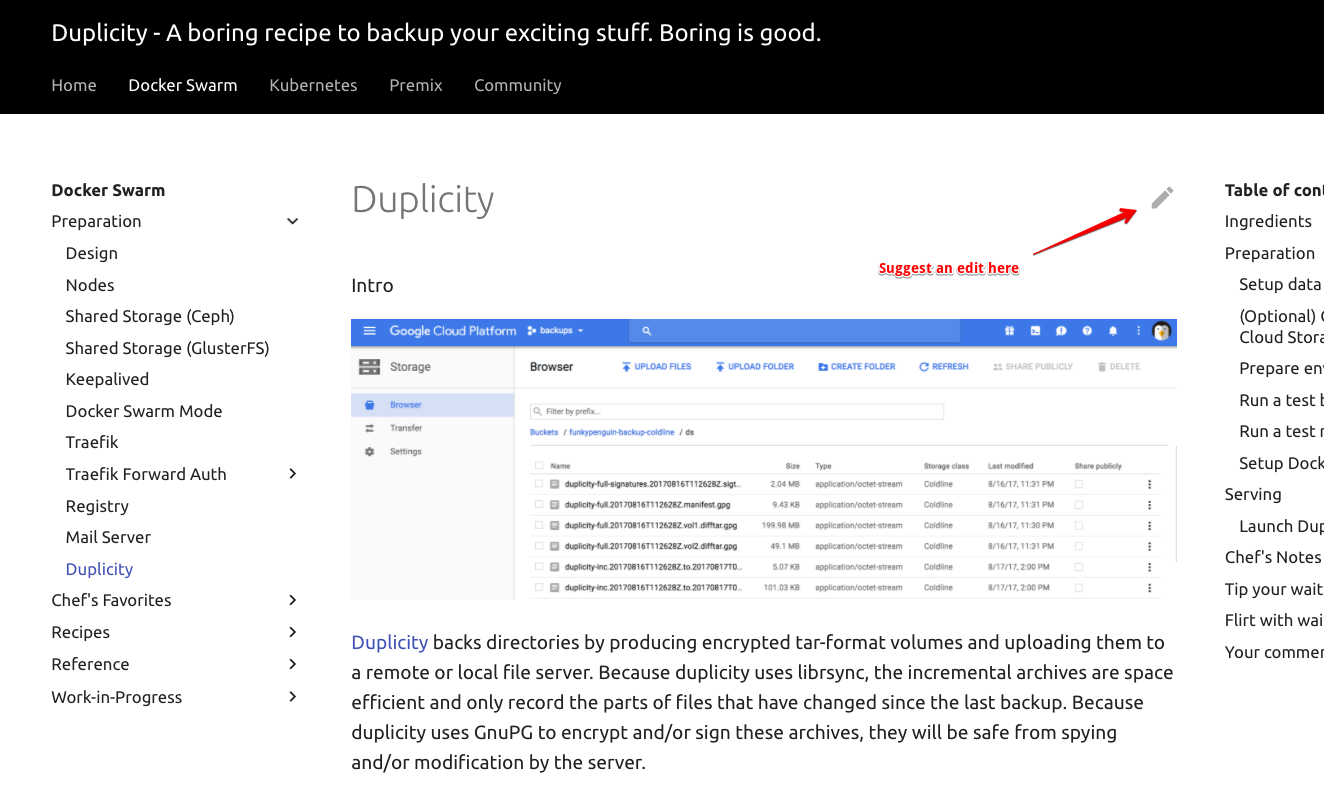

Found a typo / error in a recipe? Each recipe includes a link to make the fix, directly on GitHub:

|

||||

|

||||

{ loading=lazy }

|

||||

|

||||

Click the link to edit the recipe in Markdown format, and save to create a pull request!

|

||||

|

||||

Here's a [113-second video](https://static.funkypenguin.co.nz/how-to-contribute-to-geek-cookbook-quick-pr.mp4) illustrating the process!

|

||||

|

||||

## Contributing recipes 🎁

|

||||

|

||||

Want to contributing an entirely new recipe? Awesome!

|

||||

|

||||

For the best experience, start by [creating an issue](https://github.com/geek-cookbook/geek-cookbook/issues/) in the repo (*check whether an existing issue for this recipe exists too!*). Populating the issue template will flesh out the requirements for the recipe, and having the new recipe pre-approved will avoid wasted effort if the recipe _doesn't_ meet requirements for addition, for some reason (*i.e., if it's been superceded by an existing recipe*)

|

||||

|

||||

Once your issue has been reviewed and approved, start working on a PR using either GitHub Codespaces or local dev (below). As soon as you're ready to share your work, create a WIP PR, so that a preview URL will be generated. Iterate on your PR, marking it as ready for review when it's ... ready :grin:

|

||||

|

||||

### 🏆 GitPod

|

||||

|

||||

GitPod (free up to 50h/month) is by far the smoothest and most slick way to edit the cookbook. Click [here](https://gitpod.io/#https://github.com/geek-cookbook/geek-cookbook) to launch a browser-based editing session! 🥷

|

||||

|

||||

### 🥈 GitHub Codespaces

|

||||

|

||||

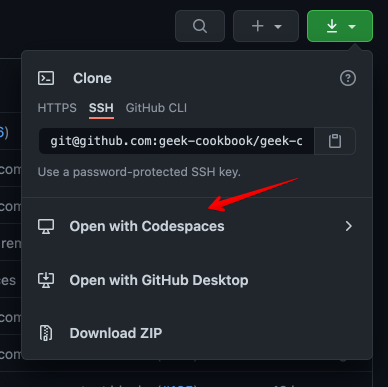

[GitHub Codespaces](https://github.com/features/codespaces) (_no longer free now that it's out of beta_) provides a browser-based VSCode interface, pre-configured for your development environment. For no-hassle contributions to the cookbook with realtime previews, visit the [repo](https://github.com/geek-cookbook/geek-cookbook), and when clicking the download button (*where you're usually get the URL to clone a repo*), click on "**Open with CodeSpaces**" instead:

|

||||

|

||||

{ loading=lazy }

|

||||

|

||||

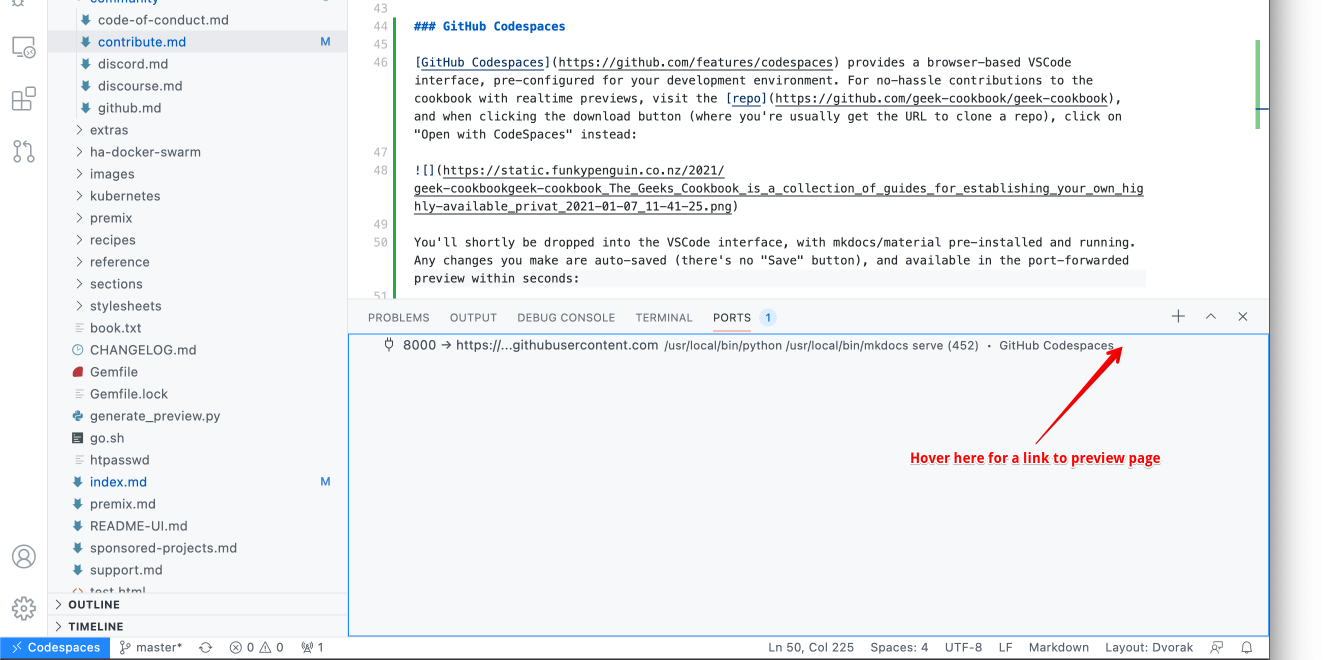

You'll shortly be dropped into the VSCode interface, with mkdocs/material pre-installed and running. Any changes you make are auto-saved (*there's no "Save" button*), and available in the port-forwarded preview within seconds:

|

||||

|

||||

{ loading=lazy }

|

||||

|

||||

Once happy with your changes, drive VSCode as normal to create a branch, commit, push, and create a pull request. You can also abandon the browser window at any time, and return later to pick up where you left off (*even on a different device!*)

|

||||

|

||||

### 🥉 Editing locally

|

||||

|

||||

The process is basically:

|

||||

|

||||

1. [Fork the repo](https://help.github.com/en/github/getting-started-with-github/fork-a-repo)

|

||||

2. Clone your forked repo locally

|

||||

3. Make a new branch for your recipe (*not strictly necessary, but it helps to differentiate multiple in-flight recipes*)

|

||||

4. Create your new recipe as a markdown file within the existing structure of the [manuscript folder](https://github.com/geek-cookbook/geek-cookbook/tree/master/manuscript)

|

||||

5. Add your recipe to the navigation by editing [mkdocs.yml](https://github.com/geek-cookbook/geek-cookbook/blob/master/mkdocs.yml#L32)

|

||||

6. Test locally by running `./scripts/serve.sh` in the repo folder (*this launches a preview in Docker*), and navigating to <http://localhost:8123>

|

||||

7. Rinse and repeat until you're ready to submit a PR

|

||||

8. Create a pull request via the GitHub UI

|

||||

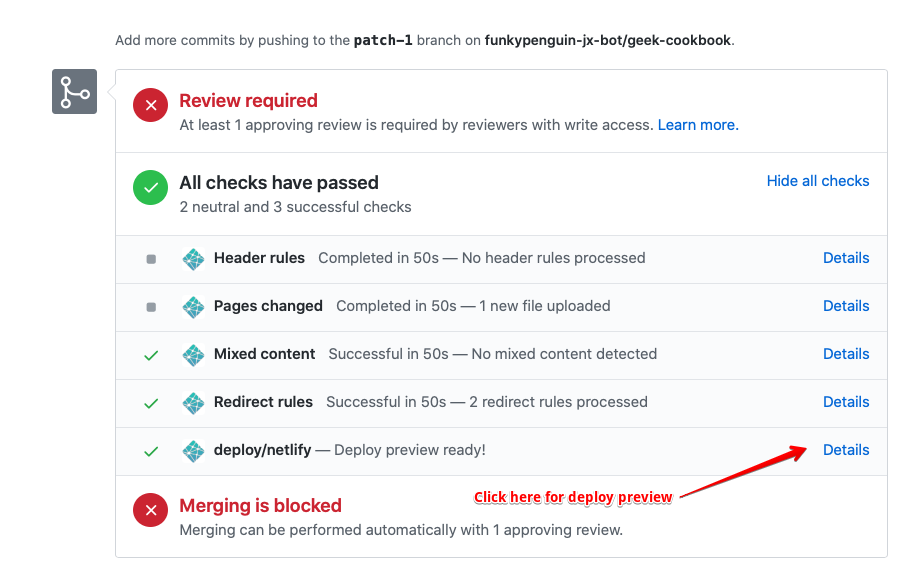

9. The pull request will trigger the creation of a preview environment, as illustrated below. Use the deploy preview to confirm that your recipe is as tasty as possible!

|

||||

|

||||

{ loading=lazy }

|

||||

|

||||

## Contributing skillz 💪

|

||||

|

||||

Got mad skillz, but neither the time nor inclination for recipe-cooking? [Scan the GitHub contributions page](https://github.com/geek-cookbook/geek-cookbook/contribute), [Discussions](https://github.com/geek-cookbook/geek-cookbook/discussions), or jump into [Discord](/community/discord/) or [Discourse](/community/discourse/), and help your fellow geeks with their questions, or just hang out bump up our member count!

|

||||

docs/community/discord.md

@@ -0,0 +1,103 @@

---
title: Geek out with Funky Penguin's Discord Server
description: The most realtime and exciting way to engage with our geeky community is in our Discord server!
icon: material/discord
---
# Discord

The most realtime and exciting way to engage with our geeky community is in our [Discord server](http://chat.funkypenguin.co.nz).

<!-- markdownlint-disable MD033 -->
<iframe src="https://e.widgetbot.io/channels/396055506072109067/456689991326760973" height="600" width="800"></iframe>

!!! question "Eh? What's a Discord? Get off my lawn, young whippersnappers!!"
    Yeah, I know. I also thought Discord was just for the gamer kids, but it turns out it's great for a geeky community. Why? [Let me elucidate ya.](https://www.youtube.com/watch?v=1qHoSWxVqtE)

    1. Native markdown for code blocks
    2. Drag-drop screenshots
    3. Costs nothing, no ads
    4. Mobile notifications are reliable, individual channels can be muted, etc

## How do I join the Discord server?

1. Create [an account](https://discordapp.com)
2. [Join the geek party](http://chat.funkypenguin.co.nz)!

## Code of Conduct

With the goal of creating a safe and inclusive community, we've adopted the [Contributor Covenant Code of Conduct](https://www.contributor-covenant.org/), as described [here](/community/code-of-conduct/).

### Reporting abuse

To report a violation of our code of conduct in our Discord server, type `!report <thing to report>` in any channel.

Your report message will immediately be deleted from the channel, and an alert raised to moderators, who will address the issue as detailed in the [enforcement guidelines](/community/code-of-conduct/#enforcement-guidelines).

## Channels

### 📔 Information

| Channel Name | Channel Use |
|--------------------|------------------------------------------------------------|
| #announcements | Used for important announcements |
| #changelog | Used for major changes to the cookbook (to be deprecated) |
| #cookbook-updates | Updates on all pushes to the master branch of the cookbook |
| #premix-updates | Updates on all pushes to the master branch of the premix |
| #discourse-updates | Updates to Discourse topics |

### 💬 Discussion

| Channel Name | Channel Use |
|----------------|----------------------------------------------------------|
| #introductions | New? Pop in here and say hi :) |
| #general | General chat - anything goes |
| #cookbook | Discussions specifically around the cookbook and recipes |
| #kubernetes | Discussions about Kubernetes |
| #docker-swarm | Discussions about Docker Swarm |
| #today-i-learned | Post tips/tricks you've stumbled across |
| #jobs | For seeking / advertising jobs, bounties, projects, etc |
| #advertisements | In here you can advertise your stream, services or websites, at a limit of 2 posts per day |
| #dev | Used for collaboration around current development |

### Suggestions

| Channel Name | Channel Use |
|--------------|-------------------------------------|
| #in-flight | A list of all suggestions in-flight |
| #completed | A list of completed suggestions |

### Music

| Channel Name | Channel Use |
|------------------|-----------------------------------|
| #music | DJs go here to control music |
| #listen-to-music | Jump in here to rock out to music |

## How to get help

If you need assistance at any time, there are a few commands you can run to get help:

`!help` Shows help content.

`!faq` Shows frequently asked questions.

## Spread the love (inviting others)

Invite your co-geeks to Discord by:

1. Sending them a link to <http://chat.funkypenguin.co.nz>, or
2. Right-clicking on the Discord server name and clicking "Invite People"

## Formatting your message

Discord supports minimal message formatting using [markdown](https://support.discord.com/hc/en-us/articles/210298617-Markdown-Text-101-Chat-Formatting-Bold-Italic-Underline-).

!!! tip "Editing your most recent message"
    You can edit your most recent message by pressing the up arrow, making your edits, and then pressing `Enter` to save!

## How do I suggest something?

1. Find the #completed channel (*under the **Suggestions** category*), and confirm that your suggestion hasn't already been voted on.
2. Find the #in-flight channel (*also under **Suggestions***), and confirm that your suggestion isn't already in-flight (*but not completed yet*).
3. In any channel, type `!suggest [your suggestion goes here]`. A post will be created in #in-flight for other users to vote on your suggestion. Suggestions change color as more users vote on them.
4. When your suggestion is completed (*or a decision has been made*), you'll receive a DM from carl-bot.

docs/community/discourse.md

@@ -0,0 +1,8 @@

---
title: Let's discourse together about geeky subjects
description: Funky Penguin's Discourse Forums serve our geeky community, and consolidate comments and discussion from both the Geek Cookbook and the blog.
---
# Discourse

If you're not into the new-fangled microblogging of Mastodon, or the realtime chatting of Discord, you can still party with us like it's 2001, using our Discourse forums (*this is also how all the recipe comments work*).

docs/community/github.md

@@ -0,0 +1,3 @@

# GitHub

You've found an intentionally un-linked page! This page is under construction, and will be up shortly. In the meantime, head to <https://github.com/geek-cookbook/geek-cookbook>!

docs/community/index.md

@@ -0,0 +1,17 @@

---
title: Funky Penguin's Geeky Communities
description: Engage with your fellow geeks, wherever they may be!
---

# Geek Community

Looking for friends / compatriots?

Find details about our communities below:

* [Discord](/community/discord/) - Realtime chat, multiple channels
* [Reddit](/community/reddit/) - Geek out old-skool
* [Mastodon](/community/mastodon/) - Federated, open-source microblogging platform
* [Discourse](https://forum.funkypenguin.co.nz) - Forums, for asynchronous communication
* [GitHub](https://github.com/funkypenguin/) - Issues and PRs
* [Facebook](https://www.facebook.com/funkypenguinnz/) - Social networking for old-timers!

docs/community/mastodon.md

@@ -0,0 +1,47 @@

---
title: Join our geeky, Docker/Kubernetes-flavored Mastodon instance
description: Looking for your geeky niche in the "fediverse"? Join our Mastodon instance!
icon: material/mastodon
---
# Toot me up, buttercup!

Mastodon is a self-hosted / open-source microblogging platform (*heavily inspired by Twitter*), which supports federation rather than centralization. Like email, any user on any Mastodon instance can follow, "toot" (*not tweet!*), and reply to any user on any *other* instance.

Our community Mastodon server is sooo [FNKY](https://so.fnky.nz/web/directory), but if you're already using Mastodon on another server (*or your [own instance][mastodon]*), you can seamlessly interact with us from there too, thanks to the magic of federation!

!!! question "This is dumb, there's nobody here"

    * Give it time. The first time you get a federated reply from someone on another instance, it just "clicks" (*at least, it did for me*)
    * Follow some folks (I'm [funkypenguin@so.fnky.nz](https://so.fnky.nz/@funkypenguin))
    * Install a [mobile client](https://joinmastodon.org/apps)

## How do I Mastodon?

1. If you're a [sponsor][github_sponsor], check the special `#mastodon` channel in [Discord][discord]. You'll find a super-sekrit invite URL which will get you set up *instantly*
2. If you're *not* a sponsor, go to [FNKY](https://so.fnky.nz) and request an invite (*invites must be approved to prevent abuse*). Mention your [Discord][discord] username in the "Why do you want to join?" question.
3. Start tootin'!

## So who do I follow?

That... is tricky. There's no big-tech algorithm to suggest friends based on previously-collected browsing / tracking data, so you'll have to build your "social graph" from the ground up, one "brick" at a time. Start by following [me](https://so.fnky.nz/@funkypenguin). Here are some more helpful links:

* [A self-curated list of accounts, sorted by theme](https://communitywiki.org/trunk)
* [Reddit thread #1](https://www.reddit.com/r/Mastodon/comments/enr4ud/who_to_follow_on_mastodon/)
* [Reddit thread #2](https://www.reddit.com/r/Mastodon/comments/p6vpvq/wanted_positive_mastodon_accounts_to_follow/)
* [Reddit thread #3](https://www.reddit.com/r/Mastodon/comments/s0ly2r/new_user_how_do_i_find_people_to_follow/)
* [Reddit thread #4](https://www.reddit.com/r/Mastodon/comments/ublg4q/is_it_possible_to_follow_accounts_from_different/)
* [Masto.host's FAQ on finding people to follow](https://masto.host/finding-people-to-follow-on-mastodon/)

## Code of Conduct

With the goal of creating a safe and inclusive community, we've adopted the [Contributor Covenant Code of Conduct](https://www.contributor-covenant.org/), as described [here](/community/code-of-conduct/). This code of conduct applies to the Mastodon server.

### Reporting abuse

To report a violation of our code of conduct in our Mastodon server, use Mastodon's "report" function, as illustrated below:

Moderators will be alerted to your report, and will address the issue as detailed in the [enforcement guidelines](/community/code-of-conduct/#enforcement-guidelines).

--8<-- "common-links.md"

docs/community/reddit.md

@@ -0,0 +1,26 @@

---
title: Funky Penguin's Subreddit
description: If you're a redditor, jump on over to our subreddit at https://www.reddit.com/r/funkypenguin to engage / share the latest!
icon: material/reddit
---

# Reddit

If you're a redditor, jump on over to our subreddit ([r/funkypenguin](https://www.reddit.com/r/funkypenguin/)) to engage / share the latest!

## How do I join the subreddit?

1. If you're not already a member, [create](https://www.reddit.com/register/) a Reddit account
2. [Subscribe](https://www.reddit.com/r/funkypenguin/) to r/funkypenguin

## Code of Conduct

With the goal of creating a safe and inclusive community, we've adopted the [Contributor Covenant Code of Conduct](https://www.contributor-covenant.org/), as described [here](/community/code-of-conduct/).

### Reporting abuse

To report a violation of our code of conduct in our subreddit, use the "Report" button as illustrated below:

The reported message will be highlighted to moderators, who will address the issue as detailed in the [enforcement guidelines](/community/code-of-conduct/#enforcement-guidelines).

docs/docker-swarm/authelia.md

@@ -0,0 +1,280 @@

---
title: Using Authelia to secure services in Docker
description: Authelia is an open-source authentication and authorization server providing 2-factor authentication and single sign-on (SSO) for your applications via a web portal.
---

# Authelia in Docker Swarm

[Authelia](https://github.com/authelia/authelia) is an open-source authentication and authorization server providing 2-factor authentication and single sign-on (SSO) for your applications via a web portal. Like [Traefik Forward Auth][tfa], Authelia acts as a companion to reverse proxies like Nginx, [Traefik](/docker-swarm/traefik/), or HAProxy, letting them know whether requests should pass through. Unauthenticated users are redirected to the Authelia sign-in portal instead. Authelia is a popular alternative to a heavyweight such as [KeyCloak][keycloak].

Features include:

* Multiple two-factor methods, such as
    * [Physical Security Key](https://www.authelia.com/docs/features/2fa/security-key) (Yubikey)
    * OTP using Google Authenticator
    * Mobile Notifications
* Lock out users after too many failed login attempts
* Highly customizable access control, using rules to match criteria such as subdomain, username, the groups the user is in, and network
* Authelia [Community](https://discord.authelia.com/) Support
* Full list of features can be viewed [here](https://www.authelia.com/docs/features/)
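
The lockout feature is driven by the `regulation` section of `configuration.yml` (the example config further down this page uses `max_retries: 3`, `find_time: 120` and `ban_time: 300`). As we read Authelia's documentation, a user is banned when `max_retries` logins fail within a `find_time`-second window, and stays banned for `ban_time` seconds. The shell function below is a simplified, hypothetical sketch of that rule (it is *not* Authelia's code, and `is_banned` is an invented name), handy for reasoning about what your chosen values mean in practice:

```bash
#!/bin/sh
# Hypothetical helper, NOT part of Authelia: approximates the `regulation` rule.
# Usage: is_banned NOW MAX_RETRIES FIND_TIME BAN_TIME [FAILURE_TIMESTAMPS...]
# Timestamps are Unix seconds, oldest first. Returns 0 if banned, 1 if allowed.
is_banned() {
  now=$1 max_retries=$2 find_time=$3 ban_time=$4
  shift 4

  # Fewer failures than max_retries can never trigger a ban.
  [ "$#" -ge "$max_retries" ] || return 1

  # Keep only the most recent max_retries failures.
  while [ "$#" -gt "$max_retries" ]; do shift; done
  oldest=$1
  for ts in "$@"; do newest=$ts; done

  # Banned if those failures all happened within find_time seconds...
  [ $((newest - oldest)) -le "$find_time" ] || return 1
  # ...and ban_time has not yet elapsed since the most recent failure.
  [ $((now - newest)) -lt "$ban_time" ]
}

# Example values from configuration.yml: 3 retries, 120s window, 300s ban.
if is_banned 1100 3 120 300 1000 1030 1060; then echo "banned"; else echo "allowed"; fi
```

With these values, three typos in two minutes locks the account for five minutes, while three failures spread over several minutes never trigger a ban.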

## Authelia requirements

!!! summary "Ingredients"
    Already deployed:

    * [X] [Docker swarm cluster](/docker-swarm/design/) with [persistent shared storage](/docker-swarm/shared-storage-ceph/)
    * [X] [Traefik](/docker-swarm/traefik/) configured per design

    New:

    * [ ] DNS entry for your auth host (*"authelia.yourdomain.com" is a good choice*), pointed to your [keepalived](/docker-swarm/keepalived/) IP

### Setup data locations

First, we create a directory to hold Authelia's configuration and data:

```bash
mkdir /var/data/config/authelia
```

### Create Authelia config file

Authelia's configuration is defined in `/var/data/config/authelia/configuration.yml`. Some settings are required and some are optional. The following is a variation of the default example config file. Optional configuration settings are described in [Authelia's documentation](https://www.authelia.com/docs/configuration/).

!!! warning
    Your variables may vary significantly from what's illustrated below, and it's best to read up and understand exactly what each option does.
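
The configuration below contains three placeholder secrets (`jwt_secret`, `session.secret` and `storage.encryption_key`, the last of which must be at least 20 characters long). Rather than generating them on a website, you could create them locally. The sketch below assumes a Linux or macOS host with `/dev/urandom`; `gen_secret` is a hypothetical helper name, not an Authelia command (`openssl rand -hex 32` works too, if OpenSSL is installed):

```bash
#!/bin/sh
# Hypothetical helper: print a random alphanumeric string of length $1
# (default 64), drawn from the kernel's random number generator.
gen_secret() {
  # LC_ALL=C makes tr treat the random input as raw bytes.
  LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "${1:-64}"
  echo
}

# Use a different value for each placeholder in configuration.yml.
printf 'jwt_secret: %s\n' "$(gen_secret 64)"
printf 'session.secret: %s\n' "$(gen_secret 64)"
printf 'storage.encryption_key: %s\n' "$(gen_secret 64)"
```

Paste each value over the matching `SECRET_GOES_HERE` placeholder, and keep them out of version control.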

```yaml title="/var/data/config/authelia/configuration.yml"
###############################################################
# Authelia configuration #
###############################################################

server:
  host: 0.0.0.0
  port: 9091

log:
  level: warn

# This secret can also be set using the env variables AUTHELIA_JWT_SECRET_FILE
# I used this site to generate the secret: https://www.grc.com/passwords.htm
jwt_secret: SECRET_GOES_HERE

# https://docs.authelia.com/configuration/miscellaneous.html#default-redirection-url
default_redirection_url: https://authelia.example.com

totp:
  issuer: authelia.example.com
  period: 30
  skew: 1

authentication_backend:
  file:
    path: /config/users_database.yml
    # customize passwords based on https://docs.authelia.com/configuration/authentication/file.html
    password:
      algorithm: argon2id
      iterations: 1
      salt_length: 16
      parallelism: 8
      memory: 1024 # blocks this much of the RAM. Tune this.

# https://docs.authelia.com/configuration/access-control.html
access_control:
  default_policy: one_factor
  rules:
    - domain: "bitwarden.example.com"
      policy: two_factor

    - domain: "whoami-authelia-2fa.example.com"
      policy: two_factor

    - domain: "*.example.com" # (1)!
      policy: one_factor


session:
  name: authelia_session
  # This secret can also be set using the env variables AUTHELIA_SESSION_SECRET_FILE
  # Used a different secret, but the same site as jwt_secret above.
  secret: SECRET_GOES_HERE
  expiration: 3600 # 1 hour
  inactivity: 300 # 5 minutes
  domain: example.com # Should match whatever your root protected domain is

regulation:
  max_retries: 3
  find_time: 120
  ban_time: 300

storage:
  encryption_key: SECRET_GOES_HERE_20_CHARACTERS_OR_LONGER
  local:
    path: /config/db.sqlite3


notifier:
  # smtp:
  #   username: SMTP_USERNAME
  #   # This secret can also be set using the env variables AUTHELIA_NOTIFIER_SMTP_PASSWORD_FILE
  #   # password: # use docker secret file instead AUTHELIA_NOTIFIER_SMTP_PASSWORD_FILE
  #   host: SMTP_HOST
  #   port: 587 #465
  #   sender: batman@example.com # customize for your setup

  # For testing purposes, notifications can be sent to a file. Be sure to map the volume in docker-compose.
  filesystem:
    filename: /config/notification.txt
```

1. The wildcard rule must go last, since the first rule to match the request wins.
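
To see why ordering matters, here's a rough sketch (not Authelia code) that mimics the `access_control` rules above with a POSIX shell `case` statement, which, like Authelia's rule list, stops at the first matching pattern and falls back to `default_policy` only when nothing matches. The shell glob is only an approximation of Authelia's domain wildcard matching, and `authelia_policy` / `policy_wildcard_first` are hypothetical names:

```bash
#!/bin/sh
# Mirrors the rules in configuration.yml, in the same order.
authelia_policy() {
  case "$1" in
    bitwarden.example.com)           echo "two_factor" ;;
    whoami-authelia-2fa.example.com) echo "two_factor" ;;
    *.example.com)                   echo "one_factor" ;;
    *)                               echo "one_factor" ;;  # default_policy
  esac
}

# The same rules with the wildcard moved to the top: it now shadows the
# more specific two_factor rules, because the first match wins.
policy_wildcard_first() {
  case "$1" in
    *.example.com)                   echo "one_factor" ;;
    bitwarden.example.com)           echo "two_factor" ;;
    whoami-authelia-2fa.example.com) echo "two_factor" ;;
    *)                               echo "one_factor" ;;  # default_policy
  esac
}

authelia_policy bitwarden.example.com        # prints two_factor
policy_wildcard_first bitwarden.example.com  # prints one_factor
```

In the second ordering, Bitwarden would silently drop to single-factor authentication, which is exactly the mistake the annotation warns about.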

|

||||

|

||||

### Create Authelia user Accounts

|

||||

|

||||

Create `/var/data/config/authelia/users_database.yml` this will be where we can create user accounts and give them groups

|

||||

|

||||

```yaml title="/var/data/config/authelia/users_database.yml"

|

||||

# To create a hashed password you can run the following command:

|

||||

# `docker run authelia/authelia:latest authelia hash-password YOUR_PASSWORD`

|

||||

users:

|

||||

batman: # each new user should be defined in a dictionary like this

|

||||

displayname: "Batman"

|

||||

# replace this with your hashed password. This one, for the purposes of testing, is "password"

|

||||

password: "$argon2id$v=19$m=65536,t=3,p=4$cW1adlh3UjhIRE9zSmZyZw$xA4S2X8BjE7LVb4NndJCZnoyHgON5w3FopO4vw5AQxE"

|

||||

email: batman@example.com

|

||||

groups:

|

||||

- admins

|

||||

- dev

|

||||

```

|

||||

|

||||

To create a hashed password, run the following command:

|

||||

`docker run authelia/authelia:latest authelia hash-password YOUR_PASSWORD`
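An argon2 hash is full of `$` characters, so it's easy to mangle when scripting user creation (an unquoted heredoc or double-quoted string will expand `$argon2id` to nothing). The helper below is a hypothetical sketch, not an Authelia tool: it appends one user entry to a file that already starts with `users:`, and writes the hash with `printf '%s'` so it is never re-expanded. It assumes two-space YAML indentation and does not escape quotes in the display name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a user entry to a users_database.yml-style file.
# Arguments: file user displayname email hash [group...]
# The hash must be single-quoted by the caller so the shell leaves '$' alone.
add_authelia_user() {
  local file="$1" user="$2" display="$3" email="$4" hash="$5" group
  shift 5
  {
    printf '  %s:\n' "$user"
    printf '    displayname: "%s"\n' "$display"
    printf '    password: "%s"\n' "$hash"
    printf '    email: %s\n' "$email"
    printf '    groups:\n'
    for group in "$@"; do
      printf '      - %s\n' "$group"
    done
  } >> "$file"
}
```

For example: `add_authelia_user /var/data/config/authelia/users_database.yml robin "Robin" robin@example.com '<output of hash-password>' dev`.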

|

||||

|

||||

### Authelia Docker Swarm config

|

||||

|

||||

Create a docker swarm config file in docker-compose syntax (v3), something like this example:

|

||||

|

||||

--8<-- "premix-cta.md"

|

||||

|

||||

```yaml title="/var/data/config/authelia/authelia.yml"

|

||||

version: "3.2"

|

||||

|

||||

services:

|

||||

authelia:

|

||||

image: authelia/authelia

|

||||

volumes:

|

||||

- /var/data/config/authelia:/config

|

||||

networks:

|

||||

- traefik_public

|

||||

deploy:

|

||||

labels:

|

||||

# traefik common

|

||||

- traefik.enable=true

|

||||

- traefik.docker.network=traefik_public

|

||||

|

||||

# traefikv1

|

||||

- traefik.frontend.rule=Host:authelia.example.com

|

||||

- traefik.port=9091

|

||||

- 'traefik.frontend.auth.forward.address=http://authelia:9091/api/verify?rd=https://authelia.example.com/'

|

||||

- 'traefik.frontend.auth.forward.trustForwardHeader=true'

|

||||

- 'traefik.frontend.auth.forward.authResponseHeaders=Remote-User,Remote-Groups,Remote-Name,Remote-Email'

|

||||

|

||||

# traefikv2

|

||||

- "traefik.http.routers.authelia.rule=Host(`authelia.example.com`)"

|

||||

- "traefik.http.routers.authelia.entrypoints=https"

|

||||

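- "traefik.http.services.authelia.loadbalancer.server.port=9091"
# define the "authelia" forwardauth middleware referenced by the whoami routers below
- "traefik.http.middlewares.authelia.forwardauth.address=http://authelia:9091/api/verify?rd=https://authelia.example.com/"
- "traefik.http.middlewares.authelia.forwardauth.trustForwardHeader=true"
- "traefik.http.middlewares.authelia.forwardauth.authResponseHeaders=Remote-User,Remote-Groups,Remote-Name,Remote-Email"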

|

||||

|

||||

whoami-1fa: # (1)!

|

||||

image: containous/whoami

|

||||

networks:

|

||||

- traefik_public

|

||||

deploy:

|

||||

labels:

|

||||

# traefik

|

||||

- "traefik.enable=true"

|

||||

- "traefik.docker.network=traefik_public"

|

||||

|

||||

# traefikv1

|

||||

- "traefik.frontend.rule=Host:whoami-authelia-1fa.example.com"

|

||||

- traefik.port=80

|

||||

- 'traefik.frontend.auth.forward.address=http://authelia:9091/api/verify?rd=https://authelia.example.com/'

|

||||

- 'traefik.frontend.auth.forward.trustForwardHeader=true'

|

||||

- 'traefik.frontend.auth.forward.authResponseHeaders=Remote-User,Remote-Groups,Remote-Name,Remote-Email'

|

||||

|

||||

# traefikv2

|

||||

- "traefik.http.routers.whoami-authelia-1fa.rule=Host(`whoami-authelia-1fa.example.com`)"

|

||||

- "traefik.http.routers.whoami-authelia-1fa.entrypoints=https"

|

||||

- "traefik.http.routers.whoami-authelia-1fa.middlewares=authelia"

|

||||

- "traefik.http.services.whoami-authelia-1fa.loadbalancer.server.port=80"

|

||||

|

||||

|

||||

whoami-2fa: # (2)!

|

||||

image: containous/whoami

|

||||

networks:

|

||||

- traefik_public

|

||||

deploy:

|

||||

labels:

|

||||

# traefik

|

||||

- "traefik.enable=true"

|

||||

- "traefik.docker.network=traefik_public"

|

||||

|

||||

# traefikv1

|

||||

- "traefik.frontend.rule=Host:whoami-authelia-2fa.example.com"

|

||||

- traefik.port=80

|

||||

- 'traefik.frontend.auth.forward.address=http://authelia:9091/api/verify?rd=https://authelia.example.com/'

|

||||

- 'traefik.frontend.auth.forward.trustForwardHeader=true'

|

||||

- 'traefik.frontend.auth.forward.authResponseHeaders=Remote-User,Remote-Groups,Remote-Name,Remote-Email'

|

||||

|

||||

# traefikv2

|

||||

- "traefik.http.routers.whoami-authelia-2fa.rule=Host(`whoami-authelia-2fa.example.com`)"

|

||||

- "traefik.http.routers.whoami-authelia-2fa.entrypoints=https"

|

||||

- "traefik.http.routers.whoami-authelia-2fa.middlewares=authelia"

|

||||

- "traefik.http.services.whoami-authelia-2fa.loadbalancer.server.port=80"

|

||||

|

||||

networks:

|

||||

traefik_public:

|

||||

external: true

|

||||

```

|

||||

|

||||

1. Optionally used to test 1FA authentication

|

||||

2. Optionally used to test 2FA authentication

|

||||

|

||||

|

||||

|

||||

!!! question "Why not just use Traefik Forward Auth?"

|

||||

While [Traefik Forward Auth][tfa] is a very lightweight, minimal authentication layer, which provides OIDC-based authentication, Authelia provides more features such as multiple methods of authentication (*Hardware, OTP, Email*), advanced rules, and push notifications.

|

||||

|

||||

## Run Authelia

|

||||

|

||||

Launch the Authelia stack by running ```docker stack deploy authelia -c /var/data/config/authelia/authelia.yml```

|

||||

|

||||

### Test Authelia

|

||||

|

||||

To confirm the service works, first try logging into Authelia itself, as a user whose password you've set in `/var/data/config/authelia/users_database.yml`.

|

||||

|

||||

You'll notice that after a successful login, you're prompted to set up 2FA. If (*like me!*) you didn't configure an SMTP server, you can still set up 2FA (*TOTP or WebAuthn*): the email containing the setup link is written to `/var/data/config/authelia/notification.txt` instead.
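Rather than reading the whole notification file, you can pull out just the links. This is a minimal sketch: `authelia_notification_links` is a hypothetical helper, and it assumes the `filesystem` notifier path used in this recipe and that the setup link appears as a plain `https://` URL in the file.

```bash
#!/usr/bin/env bash
# Print any https:// links found in Authelia's filesystem notifier output.
# Defaults to the host path mapped in this recipe; pass another file to override.
authelia_notification_links() {
  local file="${1:-/var/data/config/authelia/notification.txt}"
  # -E: extended regex, -o: print only the matched URL, one per line
  grep -Eo 'https://[^[:space:]"<>]+' "$file"
}
```

Run `authelia_notification_links | tail -n 1` to grab the most recent link.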

|

||||

|

||||

Now you're ready to test 1FA and 2FA auth, against the two "whoami" services defined in the docker-compose file.

|

||||

|

||||

Try to access each in turn, and confirm that you're _not_ prompted for 2FA on whoami-authelia-1fa, but you _are_ prompted for 2FA on whoami-authelia-2fa! :thumbsup:

|

||||

|

||||

## Summary

|

||||

|

||||

What have we achieved? By adding a simple label to a service, we can secure it behind Authelia with minimal processing overhead, and benefit from the multi-layered 1FA/2FA features Authelia provides.

|

||||

|

||||

!!! summary "Summary"

|

||||

Created:

|

||||

|

||||

* [X] Authelia configured and available to provide a layer of authentication to other services deployed in the stack

|

||||

|

||||

### Authelia vs Keycloak

|

||||

|

||||

[KeyCloak][keycloak] is the "big daddy" of self-hosted authentication platforms - it has a beautiful GUI, and a very advanced and mature feature set. Like Authelia, KeyCloak can [use an LDAP server](/recipes/keycloak/authenticate-against-openldap/) as a backend, but _unlike_ Authelia, KeyCloak supports 2-way sync with that LDAP backend, meaning KeyCloak can be used to _create_ and _update_ the LDAP entries (*Authelia only performs one-way LDAP lookups - you'll need another tool to actually administer your LDAP database*).

|

||||

|

||||

|

||||

[^1]: The initial inclusion of Authelia was due to the efforts of @bencey in Discord (Thanks Ben!)

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

97

docs/docker-swarm/design.md

Normal file

@@ -0,0 +1,97 @@

|

||||

---

|

||||

title: Design a secure, scalable Docker Swarm

|

||||

description: Presenting a Docker Swarm design to create your own container-hosting platform, which is highly-available, scalable, portable, secure and automated! 💪

|

||||

---

|

||||

|

||||

# Highly Available Docker Swarm Design

|

||||

|

||||

In the design described below, our "private cloud" platform is:

|

||||

|

||||

* **Highly-available** (_can tolerate the failure of a single component_)

|

||||

* **Scalable** (_can add resource or capacity as required_)

|

||||

* **Portable** (_run it on your garage server today, run it in AWS tomorrow_)

|

||||

* **Secure** (_access protected with [LetsEncrypt certificates](/docker-swarm/traefik/) and optional [OIDC with 2FA](/docker-swarm/traefik-forward-auth/)_)

|

||||

* **Automated** (_requires minimal care and feeding_)

|

||||

|

||||

## Design Decisions

|

||||

|

||||

### Where possible, services will be highly available

|

||||

|

||||

This means that:

|

||||

|

||||

* At least 3 docker swarm manager nodes are required, to provide fault-tolerance of a single failure.

|

||||

* [Ceph](/docker-swarm/shared-storage-ceph/) is employed for shared storage, because it too can be made tolerant of a single failure.
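Why 3 managers rather than 2? Swarm managers use Raft, which needs a majority (`floor(N/2) + 1`) of managers reachable to keep operating, so `N` managers tolerate `floor((N - 1) / 2)` failures. A small bash sketch of that arithmetic (the function names are purely illustrative):

```bash
#!/usr/bin/env bash
# Raft majority arithmetic for Docker Swarm managers.
swarm_quorum()             { echo $(( $1 / 2 + 1 )); }
swarm_tolerated_failures() { echo $(( ($1 - 1) / 2 )); }

for n in 1 2 3 4 5; do
  echo "$n managers: quorum $(swarm_quorum "$n"), tolerates $(swarm_tolerated_failures "$n") failure(s)"
done
```

Two managers tolerate no more failures than one, and four tolerate no more than three, which is why odd manager counts are preferred.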

|

||||

|

||||

!!! note

|

||||

An exception to the 3-node decision is running a single-node configuration. If you only **have** one node, then obviously your swarm is only as resilient as that node. It's still a perfectly valid swarm configuration, ideal for starting your self-hosting journey. In fact, under the single-node configuration, you don't need Ceph either, and you can simply use the local volume on your host for storage. You'll be able to migrate to Ceph and more nodes if/when you expand.

|

||||

|

||||

### Where multiple solutions to a requirement exist, preference will be given to the most portable solution

|

||||

|

||||

This means that:

|

||||

|

||||

* Services are defined using docker-compose v3 YAML syntax

|

||||

* Services are portable, meaning a particular stack could be shut down and moved to a new provider with minimal effort.

|

||||

|

||||

## Security

|

||||

|

||||

Under this design, the only inbound connections we're permitting to our docker swarm in a **minimal** configuration (*you may add custom services later, like UniFi Controller*) are:

|

||||

|

||||

### Network Flows

|

||||

|

||||

* **HTTP (TCP 80)** : Redirects to HTTPS

|

||||

* **HTTPS (TCP 443)** : Serves individual docker containers via SSL-encrypted reverse proxy

|

||||

|

||||

### Authentication

|

||||

|

||||

* Where the hosted application provides a trusted level of authentication (*e.g., [NextCloud](/recipes/nextcloud/)*), or where the application requires public exposure (*e.g., [Privatebin](/recipes/privatebin/)*), no additional layer of authentication will be required.

|

||||

* Where the hosted application provides inadequate (*e.g., [NZBGet](/recipes/autopirate/nzbget/)*) or no authentication (*e.g., [Gollum](/recipes/gollum/)*), a further authentication against an OAuth provider will be required.

|

||||

|

||||

## High availability

|

||||

|

||||

### Normal function

|

||||

|

||||

Assuming a 3-node configuration, under normal circumstances the following is illustrated:

|

||||

|

||||

* All 3 nodes provide shared storage via Ceph, which is provided by a docker container on each node.

|

||||

* All 3 nodes participate in the Docker Swarm as managers.

|

||||

* The various containers belonging to the application "stacks" deployed within Docker Swarm are automatically distributed amongst the swarm nodes.

|

||||

* Persistent storage for the containers is provided via a CephFS mount.

|

||||

* The **traefik** service (*in swarm mode*) receives incoming requests (*on HTTP and HTTPS*), and forwards them to individual containers. Traefik knows the container names because it can read the Docker socket.

|

||||

* All 3 nodes run keepalived, at varying priorities. Since traefik is running as a swarm service and listening on TCP 80/443, requests made to the keepalived VIP and arriving at **any** of the swarm nodes will be forwarded to the traefik container (*no matter which node it's on*), and then onto the target backend.

|

||||

|

||||


|

||||

|

||||

### Node failure

|

||||

|

||||

In the case of a failure (or scheduled maintenance) of one of the nodes, the following is illustrated:

|

||||

|

||||

* The failed node no longer participates in Ceph, but the remaining nodes provide enough fault-tolerance for the cluster to operate.

|

||||

* The remaining two nodes in Docker Swarm still form a quorum, and mark the failed node as down.

|

||||

* The (*possibly new*) leader manager node reschedules the containers known to be running on the failed node, onto other nodes.

|

||||

* The **traefik** service is either restarted or unaffected, and as the backend containers stop/start and change IP, traefik is aware and updates accordingly.

|

||||

* The keepalived VIP continues to function on the remaining nodes, and docker swarm continues to forward any traffic received on TCP 80/443 to the appropriate node.

|

||||

|

||||


|

||||

|

||||

### Node restore

|

||||

|

||||

When the failed (*or upgraded*) host is restored to service, the following is illustrated:

|

||||

|

||||

* Ceph regains full redundancy

|

||||

* Docker Swarm managers become aware of the recovered node, and will use it for scheduling **new** containers

|

||||

* Existing containers which were migrated off the node are not migrated back

|

||||

* Keepalived VIP regains full redundancy

|

||||

|

||||


|

||||

|

||||

### Total cluster failure

|

||||

|

||||

A day after writing this, my environment suffered a fault whereby all 3 VMs were unexpectedly and simultaneously powered off.

|

||||

|

||||

Upon restore, Docker failed to start on one of the VMs due to a local disk space issue[^1]. However, the other two VMs started, established the swarm, mounted their shared storage, and started up all the containers (services) which were managed by the swarm.

|

||||

|

||||

In summary, although I suffered an **unplanned power outage to all of my infrastructure**, followed by a **failure of a third of my hosts**... ==all my platforms are 100% available[^1] with **absolutely no manual intervention**==.

|

||||

|

||||

[^1]: Since there's no impact to availability, I can fix (or just reinstall) the failed node whenever convenient.

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

183

docs/docker-swarm/docker-swarm-mode.md

Normal file

@@ -0,0 +1,183 @@

|

||||

---

|

||||

title: Enable Docker Swarm mode

|

||||

description: For truly highly-available services with Docker containers, Docker Swarm is the simplest way to achieve redundancy, such that a single docker host could be turned off, and none of our services will be interrupted.

|

||||

---

|

||||

|

||||

# Docker Swarm Mode

|

||||

|

||||

For truly highly-available services with Docker containers, we need an orchestration system. Docker Swarm (*as defined at 1.13*) is the simplest way to achieve redundancy, such that a single docker host could be turned off, and none of our services will be interrupted.

|

||||

|

||||

## Ingredients

|

||||

|

||||

!!! summary

|

||||

Existing

|

||||

|

||||

* [X] 3 x nodes (*bare-metal or VMs*), each with:

|

||||

* A mainstream Linux OS (*tested on either [CentOS](https://www.centos.org) 7+ or [Ubuntu](http://releases.ubuntu.com) 16.04+*)

|

||||

* At least 2GB RAM

|

||||

* At least 20GB disk space (_but it'll be tight_)

|

||||

* [X] Connectivity to each other within the same subnet, and on a low-latency link (_i.e., no WAN links_)

|

||||

|

||||

## Preparation

|

||||

|

||||

### Bash auto-completion

|

||||

|

||||

Add some handy bash auto-completion for docker. Without this, you'll get annoyed that you can't autocomplete ```docker stack deploy <blah> -c <blah.yml>``` commands.

|

||||

|

||||

```bash

|

||||

cd /etc/bash_completion.d/

|

||||

curl -O https://raw.githubusercontent.com/docker/cli/b75596e1e4d5295ac69b9934d1bd8aff691a0de8/contrib/completion/bash/docker

|

||||

```

|

||||

|

||||

Install some useful bash aliases on each host:

|

||||

|

||||

```bash

|

||||

cd ~

|

||||

curl -O https://raw.githubusercontent.com/funkypenguin/geek-cookbook/master/examples/scripts/gcb-aliases.sh

|

||||

echo 'source ~/gcb-aliases.sh' >> ~/.bash_profile
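Running the `echo ... >>` line twice adds a duplicate `source` line. If you script host setup and may re-run it, an idempotent append avoids that. A minimal sketch (`append_once` is a hypothetical helper name):

```bash
#!/usr/bin/env bash
# Append a line to a file only if an identical line isn't already present.
append_once() {
  local line="$1" file="$2"
  touch "$file"
  # -q: quiet, -x: match the whole line, -F: treat the line as a fixed string
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage: append_once 'source ~/gcb-aliases.sh' ~/.bash_profile
```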

|

||||

```

|

||||

|

||||

## Serving

|

||||

|

||||

### Release the swarm!

|

||||

|

||||

Now, to launch a swarm. Pick a target node, and run `docker swarm init`

|

||||

|

||||

Yeah, that was it. Seriously. Now we have a 1-node swarm.

|

||||

|

||||

```bash

|

||||

[root@ds1 ~]# docker swarm init

|

||||

Swarm initialized: current node (b54vls3wf8xztwfz79nlkivt8) is now a manager.

|

||||

|

||||

To add a worker to this swarm, run the following command:

|

||||

|

||||

docker swarm join \

|

||||

--token SWMTKN-1-2orjbzjzjvm1bbo736xxmxzwaf4rffxwi0tu3zopal4xk4mja0-bsud7xnvhv4cicwi7l6c9s6l0 \

|

||||

202.170.164.47:2377

|

||||

|

||||

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

|

||||

|

||||

[root@ds1 ~]#

|

||||

```

|

||||

|

||||

Run `docker node ls` to confirm that you have a 1-node swarm:

|

||||

|

||||

```bash

|

||||

[root@ds1 ~]# docker node ls

|

||||

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

|

||||

b54vls3wf8xztwfz79nlkivt8 * ds1.funkypenguin.co.nz Ready Active Leader

|

||||

[root@ds1 ~]#

|

||||

```

|

||||

|

||||

Note that when you run `docker swarm init` above, the CLI output gives you a command to join further nodes to your swarm. This command would join the nodes as __workers__ (*as opposed to __managers__*). Workers can easily be promoted to managers (*and demoted again*), but since we know that we want our other two nodes to be managers too, it's simpler just to add them to the swarm as managers immediately.

|

||||

|

||||

On the first swarm node, generate the necessary token to join another manager by running ```docker swarm join-token manager```:

|

||||

|

||||

```bash

|

||||

[root@ds1 ~]# docker swarm join-token manager

|

||||

To add a manager to this swarm, run the following command:

|

||||

|

||||

docker swarm join \

|

||||

--token SWMTKN-1-2orjbzjzjvm1bbo736xxmxzwaf4rffxwi0tu3zopal4xk4mja0-cfm24bq2zvfkcwujwlp5zqxta \

|

||||

202.170.164.47:2377

|

||||

|

||||

[root@ds1 ~]#

|

||||

```

|

||||

|

||||

Run the command provided on your other nodes to join them to the swarm as managers. After adding a node, the output of ```docker node ls``` (on any manager) should list all the nodes:

|

||||

|

||||

```bash

|

||||

[root@ds2 davidy]# docker node ls

|

||||

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

|

||||

b54vls3wf8xztwfz79nlkivt8 ds1.funkypenguin.co.nz Ready Active Leader

|

||||

xmw49jt5a1j87a6ihul76gbgy * ds2.funkypenguin.co.nz Ready Active Reachable

|

||||

[root@ds2 davidy]#

|

||||

```
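Once all three nodes have joined, you can check the manager count without eyeballing the table. `docker node ls` accepts `--format` with a `{{.ManagerStatus}}` placeholder, which prints `Leader`, `Reachable` or `Unreachable` for managers and nothing for workers. The counting function below is a hypothetical helper that reads that output on stdin:

```bash
#!/usr/bin/env bash
# Count manager nodes from `docker node ls --format '{{.ManagerStatus}}'` output.
# Workers produce an empty line, so only non-empty manager statuses are counted.
count_managers() {
  grep -cE '^(Leader|Reachable|Unreachable)$'
}

# Usage on a manager node:
#   docker node ls --format '{{.ManagerStatus}}' | count_managers
```

If a node joined as a worker by mistake, `docker node promote <node>` turns it into a manager.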

|

||||

|

||||

### Setup automated cleanup

|

||||

|

||||

Docker swarm doesn't do any cleanup of old images, so as you experiment with various stacks, and as updated containers are released upstream, you'll soon find yourself losing gigabytes of disk space to old, unused images.

|

||||

|

||||

To address this, we'll run the "[meltwater/docker-cleanup](https://github.com/meltwater/docker-cleanup)" container on all of our nodes. The container will clean up unused images after 30 minutes.

|

||||

|

||||

First, create `docker-cleanup.env` (_mine is under `/var/data/config/docker-cleanup`_), and exclude container images we **know** we want to keep:

|

||||

|

||||

```bash

|

||||

KEEP_IMAGES=traefik,keepalived,docker-mailserver

|

||||

DEBUG=1

|

||||

```

|

||||

|

||||

Then create a docker-compose.yml as per the following example:

|

||||

|

||||

```yaml

|

||||

version: "3"

|

||||

|

||||

services:

|

||||

docker-cleanup:

|

||||

image: meltwater/docker-cleanup:latest

|

||||

volumes:

|

||||

- /var/run/docker.sock:/var/run/docker.sock

|

||||

- /var/lib/docker:/var/lib/docker

|

||||

networks:

|

||||

- internal

|

||||

deploy:

|

||||

mode: global

|

||||

env_file: /var/data/config/docker-cleanup/docker-cleanup.env

|

||||

|

||||

networks:

|

||||

internal:

|

||||

driver: overlay

|

||||

ipam:

|

||||

config:

|

||||

- subnet: 172.16.0.0/24

|

||||

```

|

||||

|

||||

--8<-- "reference-networks.md"

|

||||

|

||||

Launch the cleanup stack by running ```docker stack deploy docker-cleanup -c <path-to-docker-compose.yml>```

|

||||

|

||||

### Setup automatic updates

|

||||

|

||||

If your swarm runs for a long time, you might find yourself running older container images, after newer versions have been released. If you're the sort of geek who wants to live on the edge, configure [shepherd](https://github.com/djmaze/shepherd) to auto-update your container images regularly.

|

||||

|

||||

Create `/var/data/config/shepherd/shepherd.env` as per the following example:

|

||||

|

||||

```bash

|

||||

# Don't auto-update Plex or Emby (or Jellyfin), I might be watching a movie! (Customize this for the containers you _don't_ want to auto-update)

|

||||

BLACKLIST_SERVICES="plex_plex emby_emby jellyfin_jellyfin"

|

||||

# Run every 24 hours. Note that SLEEP_TIME appears to be in seconds.

|

||||

SLEEP_TIME=86400

|

||||

```
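Note that `BLACKLIST_SERVICES` takes swarm *service* names, which Docker Swarm builds as `<stack>_<service>`: the `plex` service in a stack deployed as `plex` becomes `plex_plex`. The sketch below is a hypothetical check (not Shepherd's own code) that illustrates exact-name matching against a space-separated list like the one above:

```bash
#!/usr/bin/env bash
# Hypothetical check: is a swarm service named in a space-separated blacklist?
is_blacklisted() {
  local service="$1" blacklist="$2" entry
  for entry in $blacklist; do   # unquoted on purpose: split on spaces
    [ "$entry" = "$service" ] && return 0
  done
  return 1
}
```

Use `docker service ls` to confirm the exact names before adding them to the list.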

|

||||

|

||||

Then create `/var/data/config/shepherd/shepherd.yml` as per the following example:

|

||||

|

||||

```yaml

|

||||

version: "3"

|

||||

|

||||

services:

|

||||

shepherd-app:

|

||||

image: mazzolino/shepherd

|

||||

env_file: /var/data/config/shepherd/shepherd.env

|

||||

volumes:

|

||||

- /var/run/docker.sock:/var/run/docker.sock:ro

|

||||

labels:

|

||||

- "traefik.enable=false"

|

||||

deploy:

|

||||

placement:

|

||||

constraints: [node.role == manager]

|

||||

```

|

||||

|

||||

Launch shepherd by running ```docker stack deploy shepherd -c /var/data/config/shepherd/shepherd.yml```, and then just forget about it, comfortable in the knowledge that every day, Shepherd will check that your images are the latest available, and if not, will destroy and recreate the container on the latest available image.

|

||||

|

||||

## Summary

|

||||

|

||||

--8<-- "5-min-install.md"

|

||||

|

||||

What have we achieved?

|

||||

|

||||

!!! summary "Summary"

|

||||

Created:

|

||||

|

||||

* [X] [Docker swarm cluster](/docker-swarm/design/)

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

29

docs/docker-swarm/index.md

Normal file

@@ -0,0 +1,29 @@

|

||||

---

|

||||

title: Why use Docker Swarm?

|

||||

description: Using Docker Swarm to build your own container-hosting platform which is highly-available, scalable, portable, secure and automated! 💪

|

||||

---

|

||||

|

||||

# Why Docker Swarm?

|

||||

|

||||

Pop quiz, hotshot. There's a server with containers on it. Once you run enough containers, you start to lose track of compose files / data. If the host fails, all your services are unavailable. What do you do? **WHAT DO YOU DO**?[^1]

|

||||

|

||||

<iframe width="560" height="315" src="https://www.youtube.com/embed/Ug2hLQv6WeY" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

|

||||

|

||||

You too, action-geek, can save the day, by...

|

||||

|

||||

1. Enable [Docker Swarm mode](/docker-swarm/docker-swarm-mode/) (*even just on one node*)[^2]

|

||||

2. Store your swarm configuration and application data in an [orderly and consistent structure](/reference/data_layout/)

|

||||

3. Expose all your services consistently using [Traefik](/docker-swarm/traefik/) with optional [additional per-service authentication][tfa]

|

||||

|

||||

Then you can really level-up your geek-fu, by:

|

||||

|

||||

4. Making your Docker Swarm highly available with [keepalived](/docker-swarm/keepalived/)

|

||||

5. Set up [shared storage](/docker-swarm/shared-storage-ceph/) to eliminate SPOFs

|

||||

6. [Backup](/recipes/duplicity/) your stuff automatically

|

||||

|

||||

Ready to enter the matrix? Jump in on one of the links above, or start by reading the [design](/docker-swarm/design/).

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

|

||||

[^1]: This was an [iconic movie](https://www.imdb.com/title/tt0111257/). It even won 2 Oscars! (*but not for the acting*)

|

||||

[^2]: There are significant advantages to using Docker Swarm, even on just a single node.

|

||||

91

docs/docker-swarm/keepalived.md

Normal file

@@ -0,0 +1,91 @@

|

||||

---

|

||||

title: Make docker swarm HA with keepalived

|

||||

description: While having a self-healing, scalable docker swarm is great for availability and scalability, none of that is worth a sausage if nobody can connect to your cluster!

|

||||

---

|

||||

|

||||

# Keepalived

|

||||

|

||||

While having a self-healing, scalable docker swarm is great for availability and scalability, none of that is worth a sausage if nobody can connect to your cluster!

|

||||

|

||||

In order to provide seamless external access to clustered resources, regardless of which node they're on and tolerant of node failure, you need to present a single IP to the world for external access.

|

||||

|

||||

Normally this is done using an HA load balancer, but since Docker Swarm already provides load-balancing capabilities (*[routing mesh](https://docs.docker.com/engine/swarm/ingress/)*), all we need for seamless HA is a virtual IP which can be held by more than one Docker node.

|

||||

|

||||

This is accomplished with the use of keepalived on at least two nodes.

|

||||

|

||||


|

||||

|

||||

## Ingredients

|

||||

|

||||

!!! summary "Ingredients"

|

||||

Already deployed:

|

||||

|

||||

* [X] At least 2 x swarm nodes

|

||||

* [X] low-latency link (i.e., no WAN links)

|

||||

|

||||

New:

|

||||

|

||||

* [ ] At least 3 x IPv4 addresses (*one for each node and one for the virtual IP*)[^1]

|

||||

|

||||

## Preparation

|

||||

|

||||

### Enable IPVS module

|

||||

|

||||

On all nodes which will participate in keepalived, we need the "ip_vs" (*IP Virtual Server*) kernel module loaded on the host, which the osixia/keepalived image used below expects.

|

||||

|

||||

Set this up once-off for both the primary and secondary nodes, by running:

|

||||

|

||||

```bash

|

||||

# /etc/modules lists module names (one per line) to load at boot
echo "ip_vs" >> /etc/modules

|

||||

modprobe ip_vs

|

||||

```
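To confirm the module is actually loaded (now, and again after a reboot), you can check `/proc/modules`, which is the same data `lsmod` displays. A small sketch; `module_loaded` is a hypothetical helper, and the optional second argument exists only so it can be pointed at a sample file:

```bash
#!/usr/bin/env bash
# Succeed if the named kernel module appears in /proc/modules (or a given file).
module_loaded() {
  # Each line starts with "<name> <size> ...", so anchor on the name plus a space
  grep -q "^$1 " "${2:-/proc/modules}"
}

# Usage: module_loaded ip_vs && echo "ip_vs is loaded"
```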

|

||||

|

||||

### Setup nodes

|

||||

|

||||

Assuming your IPs are as per the following example:

|

||||

|

||||

- 192.168.4.1 : Primary

|

||||

- 192.168.4.2 : Secondary

|

||||

- 192.168.4.3 : Virtual

|

||||

|

||||

Run the following on the primary:

|

||||

|

||||

```bash

|

||||

docker run -d --name keepalived --restart=always \

|

||||

--cap-add=NET_ADMIN --cap-add=NET_BROADCAST --cap-add=NET_RAW --net=host \

|

||||

-e KEEPALIVED_UNICAST_PEERS="#PYTHON2BASH:['192.168.4.1', '192.168.4.2']" \

|

||||

-e KEEPALIVED_VIRTUAL_IPS=192.168.4.3 \

|

||||

-e KEEPALIVED_PRIORITY=200 \

|

||||

osixia/keepalived:2.0.20

|

||||

```

|

||||

|

||||

And on the secondary[^2]:

|

||||

|

||||

```bash

|

||||

docker run -d --name keepalived --restart=always \

|

||||

--cap-add=NET_ADMIN --cap-add=NET_BROADCAST --cap-add=NET_RAW --net=host \

|

||||

-e KEEPALIVED_UNICAST_PEERS="#PYTHON2BASH:['192.168.4.1', '192.168.4.2']" \

|

||||

-e KEEPALIVED_VIRTUAL_IPS=192.168.4.3 \

|

||||

-e KEEPALIVED_PRIORITY=100 \

|

||||

osixia/keepalived:2.0.20

|

||||

```
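If you add a third keepalived node (see the footnote on more than 2 nodes), every node's `KEEPALIVED_UNICAST_PEERS` must list all peers, and hand-editing the quoted `#PYTHON2BASH` list is error-prone. A hypothetical bash helper that builds the value in the same format as the examples above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the KEEPALIVED_UNICAST_PEERS value for the
# osixia/keepalived image from a list of node IPs.
keepalived_peers() {
  local out="" ip
  for ip in "$@"; do
    # Prefix ", " for every entry after the first
    out+="${out:+, }'$ip'"
  done
  printf "#PYTHON2BASH:[%s]\n" "$out"
}
```

For example, `-e KEEPALIVED_UNICAST_PEERS="$(keepalived_peers 192.168.4.1 192.168.4.2)"` reproduces the value used above.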

|

||||

|

||||

## Serving

|

||||

|

||||

That's it. Each node will talk to the other via unicast (*no need to un-firewall multicast addresses*), and the node with the highest priority gets to be the master. When ingress traffic arrives on the master node via the VIP, docker's routing mesh will deliver it to the appropriate docker node.
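To see which node is currently the master, check which one holds the VIP. This sketch reads `ip -o -4 addr show` output on stdin (one address per line, containing `inet <address>/<prefix>`); `holds_vip` is a hypothetical helper name, and the example VIP is the one from this recipe:

```bash
#!/usr/bin/env bash
# Succeed if `ip -o -4 addr show` output (on stdin) includes the given VIP.
holds_vip() {
  local vip="$1"
  # The trailing "/" stops 192.168.4.3 from matching 192.168.4.30
  grep -qF "inet $vip/"
}

# Usage on each node:
#   ip -o -4 addr show | holds_vip 192.168.4.3 && echo "this node holds the VIP"
```

Stopping the `keepalived` container on the master should move the VIP to the secondary within a few seconds.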

|

||||

|

||||

## Summary

|

||||

|

||||

What have we achieved?

|

||||

|

||||

!!! summary "Summary"

|

||||

Created:

|

||||

|

||||

* [X] A Virtual IP to which all cluster traffic can be forwarded externally, making it "*Highly Available*"

|

||||

|

||||

--8<-- "5-min-install.md"

|

||||

|

||||

[^1]: Some hosting platforms (*OpenStack, for one*) won't allow you to simply "claim" a virtual IP. Each node is only able to receive traffic targeted to its unique IP, unless certain security controls are disabled by the cloud administrator. In this case, keepalived is not the right solution, and a platform-specific load-balancing solution should be used. In OpenStack, this is Neutron's "Load Balancer As A Service" (LBAAS) component. AWS, GCP and Azure would likely include similar protections.

|

||||

[^2]: More than 2 nodes can participate in keepalived. Simply ensure that each node has the appropriate priority set, and the node with the highest priority will become the master.

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

80

docs/docker-swarm/nodes.md

Normal file

@@ -0,0 +1,80 @@

|

||||

---

|

||||

title: Setup nodes for docker-swarm

|

||||

description: Let's start building our cluster. You can use either bare-metal machines or virtual machines - the configuration would be the same. To avoid confusion, I'll be referring to these as "nodes" from now on.

|

||||

---

|

||||

# Nodes

|

||||

|

||||

Let's start building our cluster. You can use either bare-metal machines or virtual machines - the configuration would be the same. To avoid confusion, I'll be referring to these as "nodes" from now on.

|

||||

|

||||

!!! note

|

||||

In 2017, I **initially** chose the "[Atomic](https://www.projectatomic.io/)" CentOS/Fedora image for the swarm hosts, but later found its outdated version of Docker to be problematic with advanced features like GPU transcoding (in [Plex](/recipes/plex/)), [Swarmprom](/recipes/swarmprom/), etc. In the end, I went mainstream and simply preferred a modern Ubuntu installation.

|

||||

|

||||

## Ingredients

|

||||

|

||||

!!! summary "Ingredients"

|

||||

New in this recipe:

|

||||

|

||||

* [ ] 3 x nodes (*bare-metal or VMs*), each with:

|

||||

* A mainstream Linux OS (*tested on either [CentOS](https://www.centos.org) 7+ or [Ubuntu](http://releases.ubuntu.com) 16.04+*)

|

||||

* At least 2GB RAM

|

||||

* At least 20GB disk space (_but it'll be tight_)

|

||||

* [ ] Connectivity to each other within the same subnet, and on a low-latency link (_i.e., no WAN links_)

|

||||

|

||||

## Preparation

|

||||

|

||||

### Permit connectivity

|

||||

|

||||