mirror of

https://github.com/funkypenguin/geek-cookbook/

synced 2026-01-25 14:37:13 +00:00

Add markdown linting (without breaking the site this time!)

This commit is contained in:

8

.github/pull_request_template.md

vendored

8

.github/pull_request_template.md

vendored

@@ -14,9 +14,11 @@

|

||||

|

||||

## Types of changes

|

||||

<!--- What types of changes does your code introduce? Put an `x` in all the boxes that apply: -->

|

||||

<!-- ignore-task-list-start -->

|

||||

- [ ] Bug fix (non-breaking change which fixes an issue)

|

||||

- [ ] New feature (non-breaking change which adds functionality)

|

||||

- [ ] Breaking change (fix or feature that would cause existing functionality to change)

|

||||

<!-- ignore-task-list-end -->

|

||||

|

||||

## Checklist

|

||||

<!--- Go over all the following points, and put an `x` in all the boxes that apply. -->

|

||||

@@ -24,10 +26,14 @@

|

||||

|

||||

- [ ] I have read the [contribution guide](https://geek-cookbook.funkypenguin.co.nz/community/contribute/#contributing-recipes)

|

||||

- [ ] The format of my changes matches that of other recipes (*ideally it was copied from [template](/manuscript/recipes/template.md)*)

|

||||

- [ ] I've added at least one footnote to my recipe (*Chef's Notes*)

|

||||

|

||||

<!--

|

||||

delete these next checks if not adding a new recipe

|

||||

-->

|

||||

|

||||

### Recipe-specific checks

|

||||

|

||||

- [ ] I've added at least one footnote to my recipe (*Chef's Notes*)

|

||||

- [ ] I've updated `common_links.md` in the `_snippets` directory and sorted alphabetically

|

||||

- [ ] I've updated the navigation in `mkdocs.yaml` in alphabetical order

|

||||

- [ ] I've updated `CHANGELOG.md` in reverse chronological order order

|

||||

|

||||

19

.github/workflows/markdownlint.yml

vendored

Normal file

19

.github/workflows/markdownlint.yml

vendored

Normal file

@@ -0,0 +1,19 @@

|

||||

name: 'Lint Markdown'

|

||||

on:

|

||||

pull_request:

|

||||

types: [opened, synchronize]

|

||||

|

||||

jobs:

|

||||

lint-markdown:

|

||||

name: Lint markdown

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- name: Check out code

|

||||

uses: actions/checkout@v2

|

||||

|

||||

- name: Lint markdown files

|

||||

uses: docker://avtodev/markdown-lint:v1 # fastest way

|

||||

with:

|

||||

config: '.markdownlint.yaml'

|

||||

args: '**/*.md'

|

||||

ignore: '_snippets' # multiple files must be separated with single space

|

||||

24

.github/workflows/mkdocs-build-sanity-check.yml

vendored

Normal file

24

.github/workflows/mkdocs-build-sanity-check.yml

vendored

Normal file

@@ -0,0 +1,24 @@

|

||||

name: 'mkdocs sanity check'

|

||||

on:

|

||||

pull_request:

|

||||

types: [opened, synchronize]

|

||||

|

||||

jobs:

|

||||

mkdocs-sanity-check:

|

||||

name: Check mkdocs builds successfully

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- name: Check out code

|

||||

uses: actions/checkout@v2

|

||||

|

||||

- name: Set up Python 3.8

|

||||

uses: actions/setup-python@v2

|

||||

with:

|

||||

python-version: 3.8

|

||||

architecture: x64

|

||||

|

||||

- name: Install requirements

|

||||

run: python3 -m pip install -r requirements.txt

|

||||

|

||||

- name: Test mkdocs builds

|

||||

run: python3 -m mkdocs build

|

||||

6

.github/workflows/prune-stale-issues-prs.yml

vendored

6

.github/workflows/prune-stale-issues-prs.yml

vendored

@@ -10,6 +10,6 @@ jobs:

|

||||

- uses: actions/stale@v3

|

||||

with:

|

||||

repo-token: ${{ secrets.GITHUB_TOKEN }}

|

||||

stale-issue-message: 'This issue has gone mouldy, because it has been open 30 days with no activity. Remove stale label or comment or this will be closed in 5 days'

|

||||

days-before-stale: 30

|

||||

days-before-close: 5

|

||||

stale-issue-message: 'This issue has gone mouldy, because it has been open 90 days with no activity. Remove stale label or comment or this will be closed in 14 days'

|

||||

days-before-stale: 90

|

||||

days-before-close: 14

|

||||

@@ -1,9 +0,0 @@

|

||||

{

|

||||

"MD046": {

|

||||

"style": "fenced"

|

||||

},

|

||||

"MD013": false,

|

||||

"MD024": {

|

||||

"siblings_only": true

|

||||

}

|

||||

}

|

||||

17

.markdownlint.yaml

Normal file

17

.markdownlint.yaml

Normal file

@@ -0,0 +1,17 @@

|

||||

# What's this for? This file is used by the markdownlinting extension in VSCode, as well as the GitHub actions

|

||||

# See all rules at https://github.com/DavidAnson/markdownlint/blob/main/doc/Rules.md

|

||||

|

||||

# Ignore line length

|

||||

"MD013": false

|

||||

|

||||

# Allow multiple headings with the same content provided the headings are not "siblings"

|

||||

"MD024":

|

||||

"siblings_only": true

|

||||

|

||||

# Allow trailing punctuation in headings

|

||||

"MD026": false

|

||||

|

||||

# We use fenced code blocks, but this test conflicts with the admonitions plugin we use, which relies

|

||||

# on indentation (which is then falsely detected as a code block)

|

||||

"MD046": false

|

||||

|

||||

@@ -1,4 +1,4 @@

|

||||

MIT License

|

||||

# MIT License

|

||||

|

||||

Copyright (c) 2021 Funky Penguin Limited

|

||||

|

||||

|

||||

44

README.md

44

README.md

@@ -9,6 +9,7 @@

|

||||

[dockerurl]: https://geek-cookbook.funkypenguin.co.nz/ha-docker-swarm/design

|

||||

[k8surl]: https://geek-cookbook.funkypenguin.co.nz/kubernetes/start

|

||||

|

||||

<!-- markdownlint-disable MD033 MD041 -->

|

||||

<div align="center">

|

||||

|

||||

[][cookbookurl]

|

||||

@@ -33,14 +34,14 @@

|

||||

|

||||

# What is this?

|

||||

|

||||

Funky Penguin's "**[Geek Cookbook](https://geek-cookbook.funkypenguin.co.nz)**" is a collection of how-to guides for establishing your own container-based self-hosting platform, using either [Docker Swarm](/ha-docker-swarm/design/) or [Kubernetes](/kubernetes/).

|

||||

Funky Penguin's "**[Geek Cookbook](https://geek-cookbook.funkypenguin.co.nz)**" is a collection of how-to guides for establishing your own container-based self-hosting platform, using either [Docker Swarm](/ha-docker-swarm/design/) or [Kubernetes](/kubernetes/).

|

||||

|

||||

Running such a platform enables you to run self-hosted tools such as [AutoPirate](/recipes/autopirate/) (*Radarr, Sonarr, NZBGet and friends*), [Plex][plex], [NextCloud][nextcloud], and includes elements such as:

|

||||

|

||||

* [Automatic SSL-secured access](/ha-docker-swarm/traefik/) to all services (*with LetsEncrypt*)

|

||||

* [SSO / authentication layer](/ha-docker-swarm/traefik-forward-auth/) to protect unsecured / vulnerable services

|

||||

* [Automated backup](/recipes/elkarbackup/) of configuration and data

|

||||

* [Monitoring and metrics](/recipes/swarmprom/) collection, graphing and alerting

|

||||

- [Automatic SSL-secured access](/ha-docker-swarm/traefik/) to all services (*with LetsEncrypt*)

|

||||

- [SSO / authentication layer](/ha-docker-swarm/traefik-forward-auth/) to protect unsecured / vulnerable services

|

||||

- [Automated backup](/recipes/elkarbackup/) of configuration and data

|

||||

- [Monitoring and metrics](/recipes/swarmprom/) collection, graphing and alerting

|

||||

|

||||

Recent updates and additions are posted on the [CHANGELOG](/CHANGELOG/), and there's a friendly community of like-minded geeks in the [Discord server](http://chat.funkypenguin.co.nz).

|

||||

|

||||

@@ -68,41 +69,40 @@ I want your [support][github_sponsor], either in the [financial][github_sponsor]

|

||||

|

||||

### Get in touch 👋

|

||||

|

||||

* Come and say hi to me and the friendly geeks in the [Discord][discord] chat or the [Discourse][discourse] forums - say hi, ask a question, or suggest a new recipe!

|

||||

* Tweet me up, I'm [@funkypenguin][twitter]! 🐦

|

||||

* [Contact me][contact] by a variety of channels

|

||||

- Come and say hi to me and the friendly geeks in the [Discord][discord] chat or the [Discourse][discourse] forums - say hi, ask a question, or suggest a new recipe!

|

||||

- Tweet me up, I'm [@funkypenguin][twitter]! 🐦

|

||||

- [Contact me][contact] by a variety of channels

|

||||

|

||||

### Buy my book 📖

|

||||

|

||||

I'm also publishing the Geek Cookbook as a formal eBook (*PDF, mobi, epub*), on Leanpub (https://leanpub.com/geek-cookbook). Buy it for as little as $5 (_which is really just a token gesture of support, since all the content is available online anyway!_) or pay what you think it's worth!

|

||||

I'm also publishing the Geek Cookbook as a formal eBook (*PDF, mobi, epub*), on Leanpub (<https://leanpub.com/geek-cookbook>). Buy it for as little as $5 (_which is really just a token gesture of support, since all the content is available online anyway!_) or pay what you think it's worth!

|

||||

|

||||

### [Sponsor][github_sponsor] / [Patronize][patreon] me ❤️

|

||||

|

||||

The best way to support this work is to become a [GitHub Sponsor](https://github.com/sponsors/funkypenguin) / [Patreon patron][patreon] (_for as little as $1/month!_) - You get :

|

||||

|

||||

* warm fuzzies,

|

||||

* access to the pre-mix repo,

|

||||

* an anonymous plug you can pull at any time,

|

||||

* and a bunch more loot based on tier

|

||||

- warm fuzzies,

|

||||

- access to the pre-mix repo,

|

||||

- an anonymous plug you can pull at any time,

|

||||

- and a bunch more loot based on tier

|

||||

|

||||

.. and I get some pocket money every month to buy wine, cheese, and cryptocurrency! 🍷 💰

|

||||

|

||||

Impulsively **[click here (NOW quick do it!)][github_sponsor]** to [sponsor me][github_sponsor] via GitHub, or [patronize me via Patreon][patreon]!

|

||||

|

||||

|

||||

### Work with me 🤝

|

||||

|

||||

Need some Cloud / Microservices / DevOps / Infrastructure design work done? I'm a full-time [AWS Certified Solution Architect (Professional)][aws_cert], a [CNCF-Certified Kubernetes Administrator](https://www.youracclaim.com/badges/cd307d51-544b-4bc6-97b0-9015e40df40d/public_url) and [Application Developer](https://www.youracclaim.com/badges/9ed9280a-fb92-46ca-b307-8f74a2cccf1d/public_url) - this stuff is my bread and butter! :bread: :fork_and_knife: [Get in touch][contact], and let's talk business!

|

||||

|

||||

[plex]: https://www.plex.tv/

|

||||

[plex]: https://www.plex.tv/

|

||||

[nextcloud]: https://nextcloud.com/

|

||||

[wordpress]: https://wordpress.org/

|

||||

[ghost]: https://ghost.io/

|

||||

[wordpress]: https://wordpress.org/

|

||||

[ghost]: https://ghost.io/

|

||||

[discord]: http://chat.funkypenguin.co.nz

|

||||

[patreon]: https://www.patreon.com/bePatron?u=6982506

|

||||

[patreon]: https://www.patreon.com/bePatron?u=6982506

|

||||

[github_sponsor]: https://github.com/sponsors/funkypenguin

|

||||

[github]: https://github.com/sponsors/funkypenguin

|

||||

[discourse]: https://discourse.geek-kitchen.funkypenguin.co.nz/

|

||||

[twitter]: https://twitter.com/funkypenguin

|

||||

[contact]: https://www.funkypenguin.co.nz

|

||||

[aws_cert]: https://www.youracclaim.com/badges/a0c4a196-55ab-4472-b46b-b610b44dc00f/public_url

|

||||

[discourse]: https://discourse.geek-kitchen.funkypenguin.co.nz/

|

||||

[twitter]: https://twitter.com/funkypenguin

|

||||

[contact]: https://www.funkypenguin.co.nz

|

||||

[aws_cert]: https://www.youracclaim.com/badges/a0c4a196-55ab-4472-b46b-b610b44dc00f/public_url

|

||||

|

||||

@@ -28,8 +28,8 @@ Recipe | Description

|

||||

|

||||

Also available via:

|

||||

|

||||

* Mastodon: https://mastodon.social/@geekcookbook_changes

|

||||

* RSS: https://mastodon.social/@geekcookbook_changes.rss

|

||||

* Mastodon: <https://mastodon.social/@geekcookbook_changes>

|

||||

* RSS: <https://mastodon.social/@geekcookbook_changes.rss>

|

||||

* The #changelog channel in our [Discord server](http://chat.funkypenguin.co.nz)

|

||||

|

||||

--8<-- "common-links.md"

|

||||

--8<-- "common-links.md"

|

||||

|

||||

@@ -8,4 +8,4 @@

|

||||

|

||||

## Conventions

|

||||

|

||||

1. When creating swarm networks, we always explicitly set the subnet in the overlay network, to avoid potential conflicts (_which docker won't prevent, but which will generate errors_) (https://github.com/moby/moby/issues/26912)

|

||||

1. When creating swarm networks, we always explicitly set the subnet in the overlay network, to avoid potential conflicts (_which docker won't prevent, but which will generate errors_) (<https://github.com/moby/moby/issues/26912>)

|

||||

|

||||

@@ -126,7 +126,7 @@ the community.

|

||||

|

||||

This Code of Conduct is adapted from the [Contributor Covenant][homepage],

|

||||

version 2.0, available at

|

||||

https://www.contributor-covenant.org/version/2/0/code_of_conduct.html.

|

||||

<https://www.contributor-covenant.org/version/2/0/code_of_conduct.html>.

|

||||

|

||||

Community Impact Guidelines were inspired by [Mozilla's code of conduct

|

||||

enforcement ladder](https://github.com/mozilla/diversity).

|

||||

@@ -134,5 +134,5 @@ enforcement ladder](https://github.com/mozilla/diversity).

|

||||

[homepage]: https://www.contributor-covenant.org

|

||||

|

||||

For answers to common questions about this code of conduct, see the FAQ at

|

||||

https://www.contributor-covenant.org/faq. Translations are available at

|

||||

https://www.contributor-covenant.org/translations.

|

||||

<https://www.contributor-covenant.org/faq>. Translations are available at

|

||||

<https://www.contributor-covenant.org/translations>.

|

||||

|

||||

@@ -15,7 +15,7 @@ Sponsor [your chef](https://github.com/sponsors/funkypenguin) :heart:, or [join

|

||||

|

||||

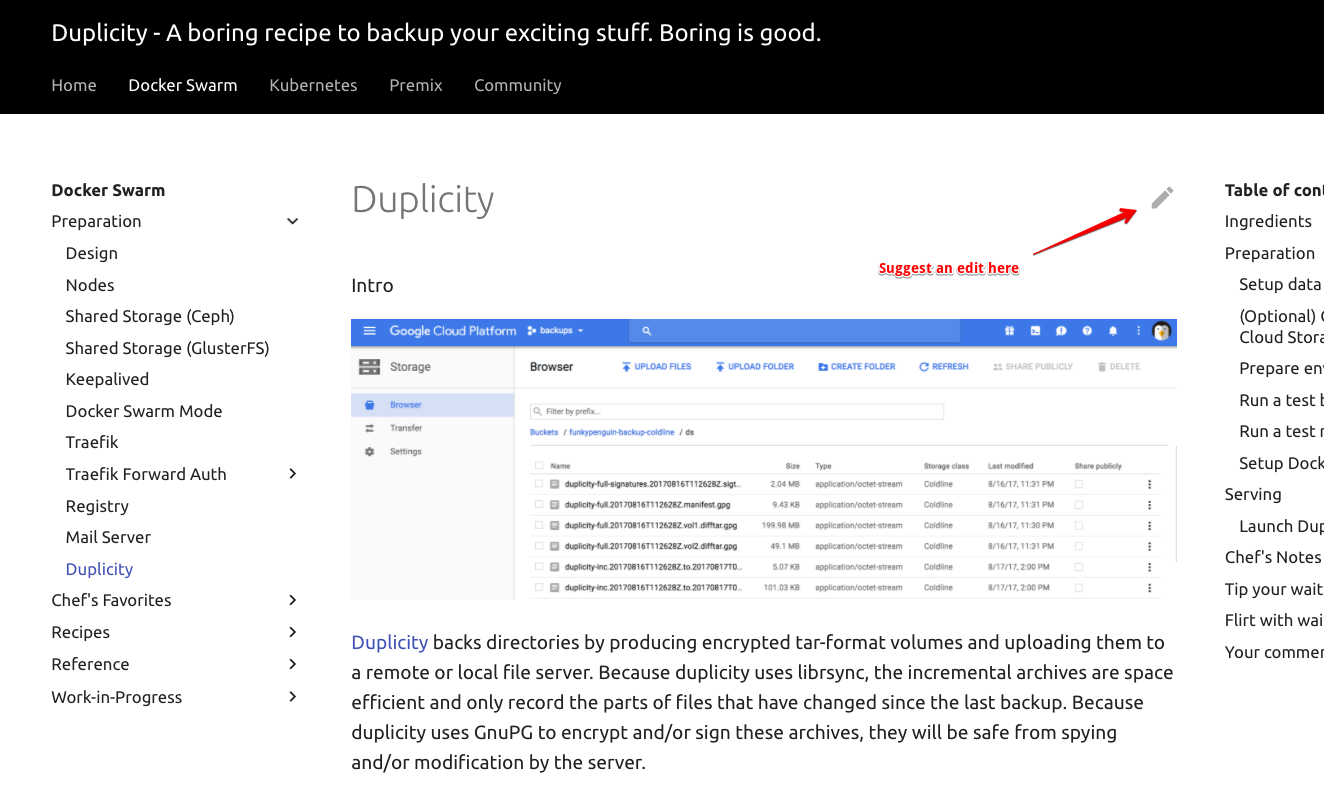

Found a typo / error in a recipe? Each recipe includes a link to make the fix, directly on GitHub:

|

||||

|

||||

|

||||

|

||||

|

||||

Click the link to edit the recipe in Markdown format, and save to create a pull request!

|

||||

|

||||

@@ -37,11 +37,11 @@ GitPod (free up to 50h/month) is by far the smoothest and most slick way to edi

|

||||

|

||||

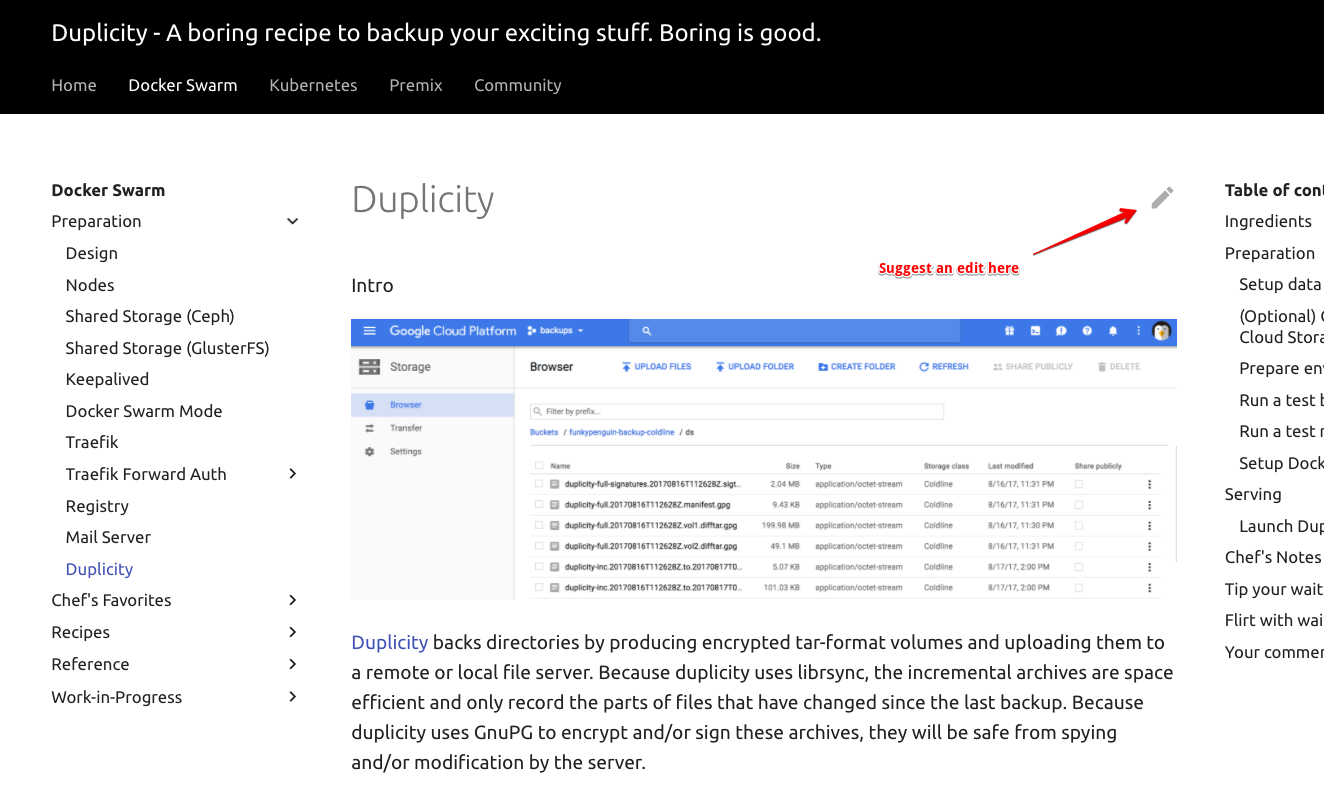

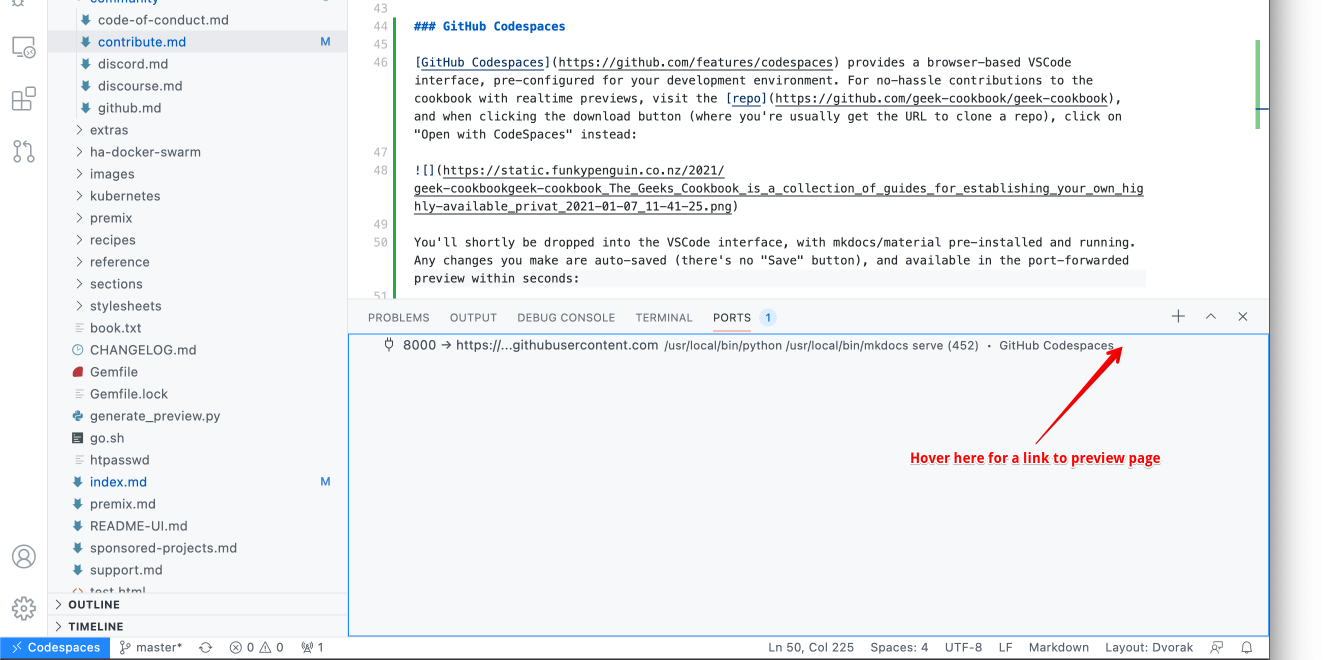

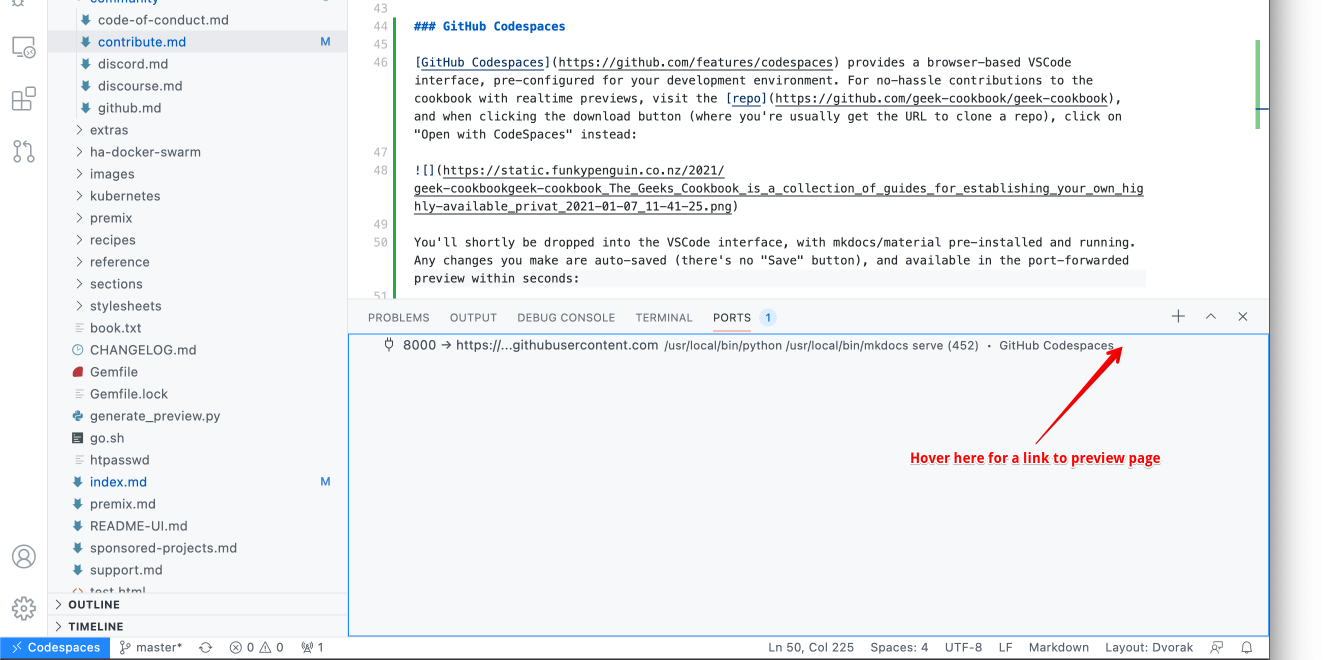

[GitHub Codespaces](https://github.com/features/codespaces) (_no longer free now that it's out of beta_) provides a browser-based VSCode interface, pre-configured for your development environment. For no-hassle contributions to the cookbook with realtime previews, visit the [repo](https://github.com/geek-cookbook/geek-cookbook), and when clicking the download button (*where you're usually get the URL to clone a repo*), click on "**Open with CodeSpaces**" instead:

|

||||

|

||||

|

||||

|

||||

|

||||

You'll shortly be dropped into the VSCode interface, with mkdocs/material pre-installed and running. Any changes you make are auto-saved (*there's no "Save" button*), and available in the port-forwarded preview within seconds:

|

||||

|

||||

|

||||

|

||||

|

||||

Once happy with your changes, drive VSCode as normal to create a branch, commit, push, and create a pull request. You can also abandon the browser window at any time, and return later to pick up where you left off (*even on a different device!*)

|

||||

|

||||

@@ -52,18 +52,15 @@ The process is basically:

|

||||

1. [Fork the repo](https://help.github.com/en/github/getting-started-with-github/fork-a-repo)

|

||||

2. Clone your forked repo locally

|

||||

3. Make a new branch for your recipe (*not strictly necessary, but it helps to differentiate multiple in-flight recipes*)

|

||||

4. Create your new recipe as a markdown file within the existing structure of the [manuscript folder](https://github.com/geek-cookbook/geek-cookbook/tree/master/manuscript)

|

||||

4. Create your new recipe as a markdown file within the existing structure of the [manuscript folder](https://github.com/geek-cookbook/geek-cookbook/tree/master/manuscript)

|

||||

5. Add your recipe to the navigation by editing [mkdocs.yml](https://github.com/geek-cookbook/geek-cookbook/blob/master/mkdocs.yml#L32)

|

||||

6. Test locally by running `./scripts/serve.sh` in the repo folder (*this launches a preview in Docker*), and navigating to http://localhost:8123

|

||||

6. Test locally by running `./scripts/serve.sh` in the repo folder (*this launches a preview in Docker*), and navigating to <http://localhost:8123>

|

||||

7. Rinse and repeat until you're ready to submit a PR

|

||||

8. Create a pull request via the GitHub UI

|

||||

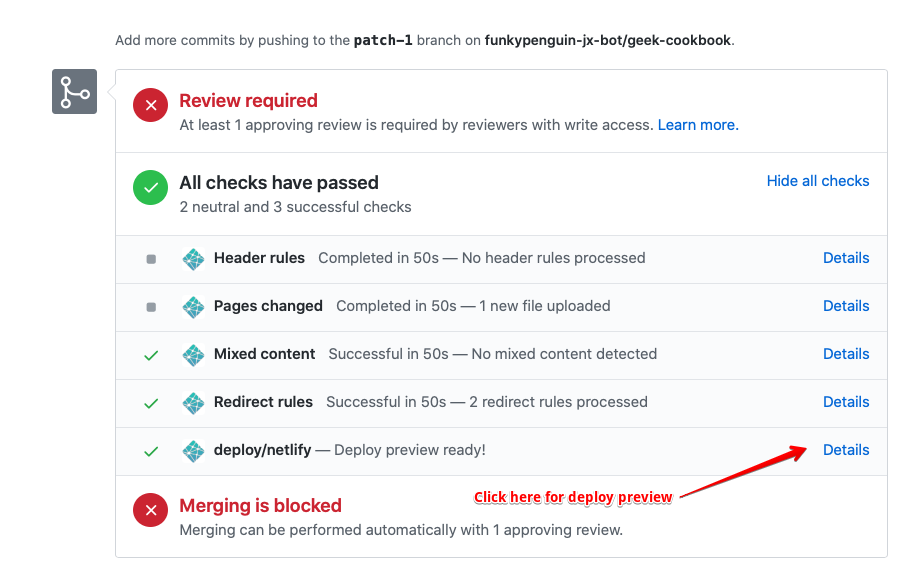

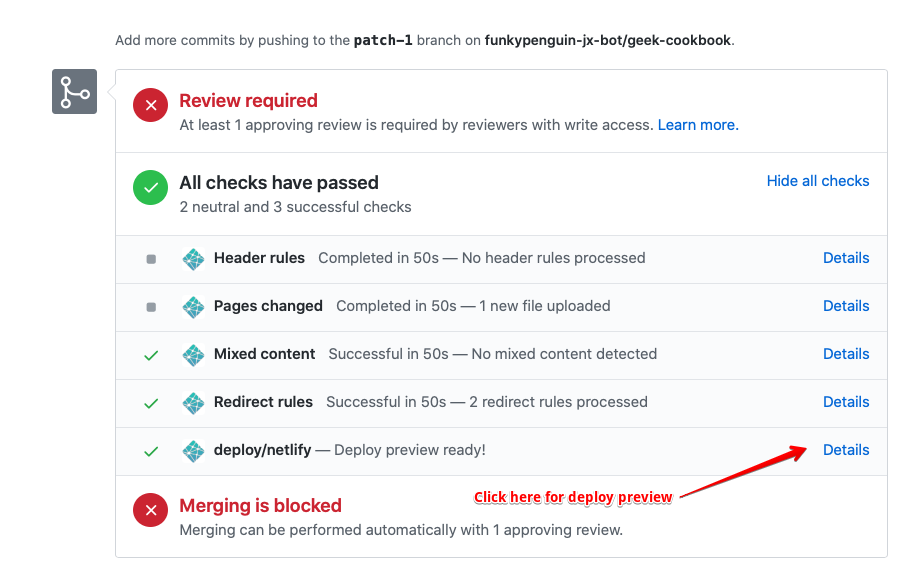

9. The pull request will trigger the creation of a preview environment, as illustrated below. Use the deploy preview to confirm that your recipe is as tasty as possible!

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## Contributing skillz 💪

|

||||

|

||||

Got mad skillz, but neither the time nor inclination for recipe-cooking? [Scan the GitHub contributions page](https://github.com/geek-cookbook/geek-cookbook/contribute), [Discussions](https://github.com/geek-cookbook/geek-cookbook/discussions), or jump into [Discord](/community/discord/) or [Discourse](/community/discourse/), and help your fellow geeks with their questions, or just hang out bump up our member count!

|

||||

|

||||

|

||||

@@ -15,8 +15,7 @@ Yeah, I know. I also thought Discord was just for the gamer kids, but it turns o

|

||||

|

||||

1. Create [an account](https://discordapp.com)

|

||||

2. [Join the geek party](http://chat.funkypenguin.co.nz)!

|

||||

|

||||

|

||||

<!-- markdownlint-disable MD033 -->

|

||||

<iframe src="https://discordapp.com/widget?id=396055506072109067&theme=dark" width="350" height="400" allowtransparency="true" frameborder="0"></iframe>

|

||||

|

||||

## Code of Conduct

|

||||

@@ -25,7 +24,7 @@ With the goal of creating a safe and inclusive community, we've adopted the [Con

|

||||

|

||||

### Reporting abuse

|

||||

|

||||

To report a violation of our code of conduct in our Discord server, type `!report <thing to report>` in any channel.

|

||||

To report a violation of our code of conduct in our Discord server, type `!report <thing to report>` in any channel.

|

||||

|

||||

Your report message will immediately be deleted from the channel, and an alert raised to moderators, who will address the issue as detailed in the [enforcement guidelines](/community/code-of-conduct/#enforcement-guidelines).

|

||||

|

||||

@@ -41,7 +40,7 @@ Your report message will immediately be deleted from the channel, and an alert r

|

||||

| #premix-updates | Updates on all pushes to the master branch of the premix |

|

||||

| #discourse-updates | Updates to Discourse topics |

|

||||

|

||||

### 💬 Discussion

|

||||

### 💬 Discussion

|

||||

|

||||

| Channel Name | Channel Use |

|

||||

|----------------|----------------------------------------------------------|

|

||||

@@ -55,22 +54,20 @@ Your report message will immediately be deleted from the channel, and an alert r

|

||||

| #advertisements | In here you can advertise your stream, services or websites, at a limit of 2 posts per day |

|

||||

| #dev | Used for collaboratio around current development. |

|

||||

|

||||

|

||||

### Suggestions

|

||||

### Suggestions

|

||||

|

||||

| Channel Name | Channel Use |

|

||||

|--------------|-------------------------------------|

|

||||

| #in-flight | A list of all suggestions in-flight |

|

||||

| #completed | A list of completed suggestions |

|

||||

|

||||

### Music

|

||||

### Music

|

||||

|

||||

| Channel Name | Channel Use |

|

||||

|------------------|-----------------------------------|

|

||||

| #music | DJs go here to control music |

|

||||

| #listen-to-music | Jump in here to rock out to music |

|

||||

|

||||

|

||||

## How to get help.

|

||||

|

||||

If you need assistance at any time there are a few commands that you can run in order to get help.

|

||||

@@ -79,12 +76,11 @@ If you need assistance at any time there are a few commands that you can run in

|

||||

|

||||

`!faq` Shows frequently asked questions.

|

||||

|

||||

|

||||

## Spread the love (inviting others)

|

||||

|

||||

Invite your co-geeks to Discord by:

|

||||

|

||||

1. Sending them a link to http://chat.funkypenguin.co.nz, or

|

||||

1. Sending them a link to <http://chat.funkypenguin.co.nz>, or

|

||||

2. Right-click on the Discord server name and click "Invite People"

|

||||

|

||||

## Formatting your message

|

||||

@@ -100,8 +96,3 @@ Discord supports minimal message formatting using [markdown](https://support.dis

|

||||

2. Find the #in-flight channel (*also under **Suggestions***), and confirm that your suggestion isn't already in-flight (*but not completed yet*)

|

||||

3. In any channel, type `!suggest [your suggestion goes here]`. A post will be created in #in-flight for other users to vote on your suggestion. Suggestions change color as more users vote on them.

|

||||

4. When your suggestion is completed (*or a decision has been made*), you'll receive a DM from carl-bot

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

@@ -1,4 +1,3 @@

|

||||

# Discourse

|

||||

|

||||

You've found an intentionally un-linked page! This page is under construction, and will be up shortly. In the meantime, head to https://discourse.geek-kitchen.funkypenguin.co.nz!

|

||||

|

||||

You've found an intentionally un-linked page! This page is under construction, and will be up shortly. In the meantime, head to <https://discourse.geek-kitchen.funkypenguin.co.nz>!

|

||||

|

||||

@@ -1,4 +1,3 @@

|

||||

# GitHub

|

||||

|

||||

You've found an intentionally un-linked page! This page is under construction, and will be up shortly. In the meantime, head to https://github.com/geek-cookbook/geek-cookbook!

|

||||

|

||||

You've found an intentionally un-linked page! This page is under construction, and will be up shortly. In the meantime, head to <https://github.com/geek-cookbook/geek-cookbook>!

|

||||

|

||||

@@ -10,7 +10,7 @@ In the design described below, our "private cloud" platform is:

|

||||

|

||||

## Design Decisions

|

||||

|

||||

**Where possible, services will be highly available.**

|

||||

### Where possible, services will be highly available.**

|

||||

|

||||

This means that:

|

||||

|

||||

@@ -39,8 +39,7 @@ Under this design, the only inbound connections we're permitting to our docker s

|

||||

### Authentication

|

||||

|

||||

* Where the hosted application provides a trusted level of authentication (*i.e., [NextCloud](/recipes/nextcloud/)*), or where the application requires public exposure (*i.e. [Privatebin](/recipes/privatebin/)*), no additional layer of authentication will be required.

|

||||

* Where the hosted application provides inadequate (*i.e. [NZBGet](/recipes/autopirate/nzbget/)*) or no authentication (*i.e. [Gollum](/recipes/gollum/)*), a further authentication against an OAuth provider will be required.

|

||||

|

||||

* Where the hosted application provides inadequate (*i.e. [NZBGet](/recipes/autopirate/nzbget/)*) or no authentication (*i.e. [Gollum](/recipes/gollum/)*), a further authentication against an OAuth provider will be required.

|

||||

|

||||

## High availability

|

||||

|

||||

@@ -78,7 +77,6 @@ When the failed (*or upgraded*) host is restored to service, the following is il

|

||||

* Existing containers which were migrated off the node are not migrated backend

|

||||

* Keepalived VIP regains full redundancy

|

||||

|

||||

|

||||

|

||||

|

||||

### Total cluster failure

|

||||

@@ -91,4 +89,4 @@ In summary, although I suffered an **unplanned power outage to all of my infrast

|

||||

|

||||

[^1]: Since there's no impact to availability, I can fix (or just reinstall) the failed node whenever convenient.

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

--8<-- "recipe-footer.md"

|

||||

|

||||

@@ -6,7 +6,7 @@ For truly highly-available services with Docker containers, we need an orchestra

|

||||

|

||||

!!! summary

|

||||

Existing

|

||||

|

||||

|

||||

* [X] 3 x nodes (*bare-metal or VMs*), each with:

|

||||

* A mainstream Linux OS (*tested on either [CentOS](https://www.centos.org) 7+ or [Ubuntu](http://releases.ubuntu.com) 16.04+*)

|

||||

* At least 2GB RAM

|

||||

@@ -19,19 +19,20 @@ For truly highly-available services with Docker containers, we need an orchestra

|

||||

|

||||

Add some handy bash auto-completion for docker. Without this, you'll get annoyed that you can't autocomplete ```docker stack deploy <blah> -c <blah.yml>``` commands.

|

||||

|

||||

```

|

||||

```bash

|

||||

cd /etc/bash_completion.d/

|

||||

curl -O https://raw.githubusercontent.com/docker/cli/b75596e1e4d5295ac69b9934d1bd8aff691a0de8/contrib/completion/bash/docker

|

||||

```

|

||||

|

||||

Install some useful bash aliases on each host

|

||||

```

|

||||

|

||||

```bash

|

||||

cd ~

|

||||

curl -O https://raw.githubusercontent.com/funkypenguin/geek-cookbook/master/examples/scripts/gcb-aliases.sh

|

||||

echo 'source ~/gcb-aliases.sh' >> ~/.bash_profile

|

||||

```

|

||||

|

||||

## Serving

|

||||

## Serving

|

||||

|

||||

### Release the swarm!

|

||||

|

||||

@@ -39,7 +40,7 @@ Now, to launch a swarm. Pick a target node, and run `docker swarm init`

|

||||

|

||||

Yeah, that was it. Seriously. Now we have a 1-node swarm.

|

||||

|

||||

```

|

||||

```bash

|

||||

[root@ds1 ~]# docker swarm init

|

||||

Swarm initialized: current node (b54vls3wf8xztwfz79nlkivt8) is now a manager.

|

||||

|

||||

@@ -56,7 +57,7 @@ To add a manager to this swarm, run 'docker swarm join-token manager' and follow

|

||||

|

||||

Run `docker node ls` to confirm that you have a 1-node swarm:

|

||||

|

||||

```

|

||||

```bash

|

||||

[root@ds1 ~]# docker node ls

|

||||

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

|

||||

b54vls3wf8xztwfz79nlkivt8 * ds1.funkypenguin.co.nz Ready Active Leader

|

||||

@@ -67,7 +68,7 @@ Note that when you run `docker swarm init` above, the CLI output gives youe a co

|

||||

|

||||

On the first swarm node, generate the necessary token to join another manager by running ```docker swarm join-token manager```:

|

||||

|

||||

```

|

||||

```bash

|

||||

[root@ds1 ~]# docker swarm join-token manager

|

||||

To add a manager to this swarm, run the following command:

|

||||

|

||||

@@ -80,8 +81,7 @@ To add a manager to this swarm, run the following command:

|

||||

|

||||

Run the command provided on your other nodes to join them to the swarm as managers. After addition of a node, the output of ```docker node ls``` (on either host) should reflect all the nodes:

|

||||

|

||||

|

||||

```

|

||||

```bash

|

||||

[root@ds2 davidy]# docker node ls

|

||||

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

|

||||

b54vls3wf8xztwfz79nlkivt8 ds1.funkypenguin.co.nz Ready Active Leader

|

||||

@@ -97,14 +97,14 @@ To address this, we'll run the "[meltwater/docker-cleanup](https://github.com/me

|

||||

|

||||

First, create `docker-cleanup.env` (_mine is under `/var/data/config/docker-cleanup`_), and exclude container images we **know** we want to keep:

|

||||

|

||||

```

|

||||

```bash

|

||||

KEEP_IMAGES=traefik,keepalived,docker-mailserver

|

||||

DEBUG=1

|

||||

```

|

||||

|

||||

Then create a docker-compose.yml as follows:

|

||||

|

||||

```

|

||||

```yaml

|

||||

version: "3"

|

||||

|

||||

services:

|

||||

@@ -137,7 +137,7 @@ If your swarm runs for a long time, you might find yourself running older contai

|

||||

|

||||

Create `/var/data/config/shepherd/shepherd.env` as follows:

|

||||

|

||||

```

|

||||

```bash

|

||||

# Don't auto-update Plex or Emby (or Jellyfin), I might be watching a movie! (Customize this for the containers you _don't_ want to auto-update)

|

||||

BLACKLIST_SERVICES="plex_plex emby_emby jellyfin_jellyfin"

|

||||

# Run every 24 hours. Note that SLEEP_TIME appears to be in seconds.

|

||||

@@ -146,7 +146,7 @@ SLEEP_TIME=86400

|

||||

|

||||

Then create /var/data/config/shepherd/shepherd.yml as follows:

|

||||

|

||||

```

|

||||

```yaml

|

||||

version: "3"

|

||||

|

||||

services:

|

||||

@@ -175,4 +175,4 @@ What have we achieved?

|

||||

|

||||

* [X] [Docker swarm cluster](/ha-docker-swarm/design/)

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

--8<-- "recipe-footer.md"

|

||||

|

||||

@@ -34,7 +34,7 @@ On all nodes which will participate in keepalived, we need the "ip_vs" kernel mo

|

||||

|

||||

Set this up once-off for both the primary and secondary nodes, by running:

|

||||

|

||||

```

|

||||

```bash

|

||||

echo "modprobe ip_vs" >> /etc/modules

|

||||

modprobe ip_vs

|

||||

```

|

||||

@@ -43,14 +43,13 @@ modprobe ip_vs

|

||||

|

||||

Assuming your IPs are as follows:

|

||||

|

||||

```

|

||||

* 192.168.4.1 : Primary

|

||||

* 192.168.4.2 : Secondary

|

||||

* 192.168.4.3 : Virtual

|

||||

```

|

||||

- 192.168.4.1 : Primary

|

||||

- 192.168.4.2 : Secondary

|

||||

- 192.168.4.3 : Virtual

|

||||

|

||||

Run the following on the primary

|

||||

```

|

||||

|

||||

```bash

|

||||

docker run -d --name keepalived --restart=always \

|

||||

--cap-add=NET_ADMIN --cap-add=NET_BROADCAST --cap-add=NET_RAW --net=host \

|

||||

-e KEEPALIVED_UNICAST_PEERS="#PYTHON2BASH:['192.168.4.1', '192.168.4.2']" \

|

||||

@@ -60,7 +59,8 @@ docker run -d --name keepalived --restart=always \

|

||||

```

|

||||

|

||||

And on the secondary[^2]:

|

||||

```

|

||||

|

||||

```bash

|

||||

docker run -d --name keepalived --restart=always \

|

||||

--cap-add=NET_ADMIN --cap-add=NET_BROADCAST --cap-add=NET_RAW --net=host \

|

||||

-e KEEPALIVED_UNICAST_PEERS="#PYTHON2BASH:['192.168.4.1', '192.168.4.2']" \

|

||||

@@ -73,7 +73,6 @@ docker run -d --name keepalived --restart=always \

|

||||

|

||||

That's it. Each node will talk to the other via unicast (*no need to un-firewall multicast addresses*), and the node with the highest priority gets to be the master. When ingress traffic arrives on the master node via the VIP, docker's routing mesh will deliver it to the appropriate docker node.

|

||||

|

||||

|

||||

## Summary

|

||||

|

||||

What have we achieved?

|

||||

@@ -88,4 +87,4 @@ What have we achieved?

|

||||

[^1]: Some hosting platforms (*OpenStack, for one*) won't allow you to simply "claim" a virtual IP. Each node is only able to receive traffic targetted to its unique IP, unless certain security controls are disabled by the cloud administrator. In this case, keepalived is not the right solution, and a platform-specific load-balancing solution should be used. In OpenStack, this is Neutron's "Load Balancer As A Service" (LBAAS) component. AWS, GCP and Azure would likely include similar protections.

|

||||

[^2]: More than 2 nodes can participate in keepalived. Simply ensure that each node has the appropriate priority set, and the node with the highest priority will become the master.

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

--8<-- "recipe-footer.md"

|

||||

|

||||

@@ -16,7 +16,6 @@ Let's start building our cluster. You can use either bare-metal machines or virt

|

||||

* At least 20GB disk space (_but it'll be tight_)

|

||||

* [ ] Connectivity to each other within the same subnet, and on a low-latency link (_i.e., no WAN links_)

|

||||

|

||||

|

||||

## Preparation

|

||||

|

||||

### Permit connectivity

|

||||

@@ -27,7 +26,7 @@ Most modern Linux distributions include firewall rules which only only permit mi

|

||||

|

||||

Add something like this to `/etc/sysconfig/iptables`:

|

||||

|

||||

```

|

||||

```bash

|

||||

# Allow all inter-node communication

|

||||

-A INPUT -s 192.168.31.0/24 -j ACCEPT

|

||||

```

|

||||

@@ -38,7 +37,7 @@ And restart iptables with ```systemctl restart iptables```

|

||||

|

||||

Install the (*non-default*) persistent iptables tools, by running `apt-get install iptables-persistent`, establishing some default rules (*dkpg will prompt you to save current ruleset*), and then add something like this to `/etc/iptables/rules.v4`:

|

||||

|

||||

```

|

||||

```bash

|

||||

# Allow all inter-node communication

|

||||

-A INPUT -s 192.168.31.0/24 -j ACCEPT

|

||||

```

|

||||

@@ -49,17 +48,15 @@ And refresh your running iptables rules with `iptables-restore < /etc/iptables/r

|

||||

|

||||

Depending on your hosting environment, you may have DNS automatically setup for your VMs. If not, it's useful to set up static entries in /etc/hosts for the nodes. For example, I setup the following:

|

||||

|

||||

```

|

||||

192.168.31.11 ds1 ds1.funkypenguin.co.nz

|

||||

192.168.31.12 ds2 ds2.funkypenguin.co.nz

|

||||

192.168.31.13 ds3 ds3.funkypenguin.co.nz

|

||||

```

|

||||

- 192.168.31.11 ds1 ds1.funkypenguin.co.nz

|

||||

- 192.168.31.12 ds2 ds2.funkypenguin.co.nz

|

||||

- 192.168.31.13 ds3 ds3.funkypenguin.co.nz

|

||||

|

||||

### Set timezone

|

||||

|

||||

Set your local timezone, by running:

|

||||

|

||||

```

|

||||

```bash

|

||||

ln -sf /usr/share/zoneinfo/<your timezone> /etc/localtime

|

||||

```

|

||||

|

||||

@@ -69,11 +66,11 @@ After completing the above, you should have:

|

||||

|

||||

!!! summary "Summary"

|

||||

Deployed in this recipe:

|

||||

|

||||

|

||||

* [X] 3 x nodes (*bare-metal or VMs*), each with:

|

||||

* A mainstream Linux OS (*tested on either [CentOS](https://www.centos.org) 7+ or [Ubuntu](http://releases.ubuntu.com) 16.04+*)

|

||||

* At least 2GB RAM

|

||||

* At least 20GB disk space (_but it'll be tight_)

|

||||

* [X] Connectivity to each other within the same subnet, and on a low-latency link (_i.e., no WAN links_)

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

--8<-- "recipe-footer.md"

|

||||

|

||||

@@ -18,7 +18,7 @@ The registry mirror runs as a swarm stack, using a simple docker-compose.yml. Cu

|

||||

|

||||

Create /var/data/config/registry/registry.yml as follows:

|

||||

|

||||

```

|

||||

```yaml

|

||||

version: "3"

|

||||

|

||||

services:

|

||||

@@ -48,7 +48,7 @@ We create this registry without consideration for SSL, which will fail if we att

|

||||

|

||||

Create /var/data/registry/registry-mirror-config.yml as follows:

|

||||

|

||||

```

|

||||

```yaml

|

||||

version: 0.1

|

||||

log:

|

||||

fields:

|

||||

@@ -83,7 +83,7 @@ Launch the registry stack by running `docker stack deploy registry -c <path-to-d

|

||||

|

||||

To tell docker to use the registry mirror, and (_while we're here_) in order to be able to watch the logs of any service from any manager node (_an experimental feature in the current Atomic docker build_), edit **/etc/docker-latest/daemon.json** on each node, and change from:

|

||||

|

||||

```

|

||||

```json

|

||||

{

|

||||

"log-driver": "journald",

|

||||

"signature-verification": false

|

||||

@@ -92,7 +92,7 @@ To tell docker to use the registry mirror, and (_while we're here_) in order to

|

||||

|

||||

To:

|

||||

|

||||

```

|

||||

```json

|

||||

{

|

||||

"log-driver": "journald",

|

||||

"signature-verification": false,

|

||||

@@ -103,11 +103,11 @@ To:

|

||||

|

||||

Then restart docker by running:

|

||||

|

||||

```

|

||||

```bash

|

||||

systemctl restart docker-latest

|

||||

```

|

||||

|

||||

!!! tip ""

|

||||

Note the extra comma required after "false" above

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

--8<-- "recipe-footer.md"

|

||||

|

||||

@@ -29,7 +29,7 @@ One of your nodes will become the cephadm "master" node. Although all nodes will

|

||||

|

||||

Run the following on the ==master== node:

|

||||

|

||||

```

|

||||

```bash

|

||||

MYIP=`ip route get 1.1.1.1 | grep -oP 'src \K\S+'`

|

||||

curl --silent --remote-name --location https://github.com/ceph/ceph/raw/octopus/src/cephadm/cephadm

|

||||

chmod +x cephadm

|

||||

@@ -44,7 +44,7 @@ The process takes about 30 seconds, after which, you'll have a MVC (*Minimum Via

|

||||

??? "Example output from a fresh cephadm bootstrap"

|

||||

```

|

||||

root@raphael:~# MYIP=`ip route get 1.1.1.1 | grep -oP 'src \K\S+'`

|

||||

root@raphael:~# curl --silent --remote-name --location https://github.com/ceph/ceph/raw/octopus/src/cephadm/cephadm

|

||||

root@raphael:~# curl --silent --remote-name --location <https://github.com/ceph/ceph/raw/octopus/src/cephadm/cephadm>

|

||||

|

||||

root@raphael:~# chmod +x cephadm

|

||||

root@raphael:~# mkdir -p /etc/ceph

|

||||

@@ -130,7 +130,6 @@ The process takes about 30 seconds, after which, you'll have a MVC (*Minimum Via

|

||||

root@raphael:~#

|

||||

```

|

||||

|

||||

|

||||

### Prepare other nodes

|

||||

|

||||

It's now necessary to tranfer the following files to your ==other== nodes, so that cephadm can add them to your cluster, and so that they'll be able to mount the cephfs when we're done:

|

||||

@@ -141,11 +140,10 @@ It's now necessary to tranfer the following files to your ==other== nodes, so th

|

||||

| `/etc/ceph/ceph.client.admin.keyring` | `/etc/ceph/ceph.client.admin.keyring` |

|

||||

| `/etc/ceph/ceph.pub` | `/root/.ssh/authorized_keys` (append to anything existing) |

|

||||

|

||||

|

||||

Back on the ==master== node, run `ceph orch host add <node-name>` once for each other node you want to join to the cluster. You can validate the results by running `ceph orch host ls`

|

||||

|

||||

!!! question "Should we be concerned about giving cephadm using root access over SSH?"

|

||||

Not really. Docker is inherently insecure at the host-level anyway (*think what would happen if you launched a global-mode stack with a malicious container image which mounted `/root/.ssh`*), so worrying about cephadm seems a little barn-door-after-horses-bolted. If you take host-level security seriously, consider switching to [Kubernetes](/kubernetes/) :)

|

||||

Not really. Docker is inherently insecure at the host-level anyway (*think what would happen if you launched a global-mode stack with a malicious container image which mounted `/root/.ssh`*), so worrying about cephadm seems a little barn-door-after-horses-bolted. If you take host-level security seriously, consider switching to [Kubernetes](/kubernetes/) :)

|

||||

|

||||

### Add OSDs

|

||||

|

||||

@@ -161,7 +159,7 @@ You can watch the progress by running `ceph fs ls` (to see the fs is configured)

|

||||

|

||||

On ==every== node, create a mountpoint for the data, by running ```mkdir /var/data```, add an entry to fstab to ensure the volume is auto-mounted on boot, and ensure the volume is actually _mounted_ if there's a network / boot delay getting access to the gluster volume:

|

||||

|

||||

```

|

||||

```bash

|

||||

mkdir /var/data

|

||||

|

||||

MYNODES="<node1>,<node2>,<node3>" # Add your own nodes here, comma-delimited

|

||||

@@ -184,14 +182,13 @@ mount -a

|

||||

mount -a

|

||||

```

|

||||

|

||||

|

||||

## Serving

|

||||

|

||||

### Sprinkle with tools

|

||||

|

||||

Although it's possible to use `cephadm shell` to exec into a container with the necessary ceph tools, it's more convenient to use the native CLI tools. To this end, on each node, run the following, which will install the appropriate apt repository, and install the latest ceph CLI tools:

|

||||

|

||||

```

|

||||

```bash

|

||||

curl -L https://download.ceph.com/keys/release.asc | sudo apt-key add -

|

||||

cephadm add-repo --release octopus

|

||||

cephadm install ceph-common

|

||||

@@ -199,9 +196,9 @@ cephadm install ceph-common

|

||||

|

||||

### Drool over dashboard

|

||||

|

||||

Ceph now includes a comprehensive dashboard, provided by the mgr daemon. The dashboard will be accessible at https://[IP of your ceph master node]:8443, but you'll need to run `ceph dashboard ac-user-create <username> <password> administrator` first, to create an administrator account:

|

||||

Ceph now includes a comprehensive dashboard, provided by the mgr daemon. The dashboard will be accessible at `https://[IP of your ceph master node]:8443`, but you'll need to run `ceph dashboard ac-user-create <username> <password> administrator` first, to create an administrator account:

|

||||

|

||||

```

|

||||

```bash

|

||||

root@raphael:~# ceph dashboard ac-user-create batman supermansucks administrator

|

||||

{"username": "batman", "password": "$2b$12$3HkjY85mav.dq3HHAZiWP.KkMiuoV2TURZFH.6WFfo/BPZCT/0gr.", "roles": ["administrator"], "name": null, "email": null, "lastUpdate": 1590372281, "enabled": true, "pwdExpirationDate": null, "pwdUpdateRequired": false}

|

||||

root@raphael:~#

|

||||

@@ -223,11 +220,7 @@ What have we achieved?

|

||||

Here's a screencast of the playbook in action. I sped up the boring parts, it actually takes ==5 min== (*you can tell by the timestamps on the prompt*):

|

||||

|

||||

|

||||

[patreon]: https://www.patreon.com/bePatron?u=6982506

|

||||

[github_sponsor]: https://github.com/sponsors/funkypenguin

|

||||

[patreon]: <https://www.patreon.com/bePatron?u=6982506>

|

||||

[github_sponsor]: <https://github.com/sponsors/funkypenguin>

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

--8<-- "recipe-footer.md"

|

||||

|

||||

@@ -32,7 +32,7 @@ On each host, run a variation following to create your bricks, adjusted for the

|

||||

|

||||

!!! note "The example below assumes /dev/vdb is dedicated to the gluster volume"

|

||||

|

||||

```

|

||||

```bash

|

||||

(

|

||||

echo o # Create a new empty DOS partition table

|

||||

echo n # Add a new partition

|

||||

@@ -60,7 +60,7 @@ Atomic doesn't include the Gluster server components. This means we'll have to

|

||||

|

||||

Run the following on each host:

|

||||

|

||||

````

|

||||

````bash

|

||||

docker run \

|

||||

-h glusterfs-server \

|

||||

-v /etc/glusterfs:/etc/glusterfs:z \

|

||||

@@ -82,7 +82,7 @@ From the node, run `gluster peer probe <other host>`.

|

||||

|

||||

Example output:

|

||||

|

||||

```

|

||||

```bash

|

||||

[root@glusterfs-server /]# gluster peer probe ds1

|

||||

peer probe: success.

|

||||

[root@glusterfs-server /]#

|

||||

@@ -92,7 +92,7 @@ Run ```gluster peer status``` on both nodes to confirm that they're properly con

|

||||

|

||||

Example output:

|

||||

|

||||

```

|

||||

```bash

|

||||

[root@glusterfs-server /]# gluster peer status

|

||||

Number of Peers: 1

|

||||

|

||||

@@ -108,7 +108,7 @@ Now we create a *replicated volume* out of our individual "bricks".

|

||||

|

||||

Create the gluster volume by running:

|

||||

|

||||

```

|

||||

```bash

|

||||

gluster volume create gv0 replica 2 \

|

||||

server1:/var/no-direct-write-here/brick1 \

|

||||

server2:/var/no-direct-write-here/brick1

|

||||

@@ -116,7 +116,7 @@ gluster volume create gv0 replica 2 \

|

||||

|

||||

Example output:

|

||||

|

||||

```

|

||||

```bash

|

||||

[root@glusterfs-server /]# gluster volume create gv0 replica 2 ds1:/var/no-direct-write-here/brick1/gv0 ds3:/var/no-direct-write-here/brick1/gv0

|

||||

volume create: gv0: success: please start the volume to access data

|

||||

[root@glusterfs-server /]#

|

||||

@@ -124,7 +124,7 @@ volume create: gv0: success: please start the volume to access data

|

||||

|

||||

Start the volume by running ```gluster volume start gv0```

|

||||

|

||||

```

|

||||

```bash

|

||||

[root@glusterfs-server /]# gluster volume start gv0

|

||||

volume start: gv0: success

|

||||

[root@glusterfs-server /]#

|

||||

@@ -138,7 +138,7 @@ From one other host, run ```docker exec -it glusterfs-server bash``` to shell in

|

||||

|

||||

On the host (i.e., outside of the container - type ```exit``` if you're still shelled in), create a mountpoint for the data, by running ```mkdir /var/data```, add an entry to fstab to ensure the volume is auto-mounted on boot, and ensure the volume is actually _mounted_ if there's a network / boot delay getting access to the gluster volume:

|

||||

|

||||

```

|

||||

```bash

|

||||

mkdir /var/data

|

||||

MYHOST=`hostname -s`

|

||||

echo '' >> /etc/fstab >> /etc/fstab

|

||||

@@ -149,7 +149,7 @@ mount -a

|

||||

|

||||

For some reason, my nodes won't auto-mount this volume on boot. I even tried the trickery below, but they stubbornly refuse to automount:

|

||||

|

||||

```

|

||||

```bash

|

||||

echo -e "\n\n# Give GlusterFS 10s to start before \

|

||||

mounting\nsleep 10s && mount -a" >> /etc/rc.local

|

||||

systemctl enable rc-local.service

|

||||

@@ -168,4 +168,4 @@ After completing the above, you should have:

|

||||

1. Migration of shared storage from GlusterFS to Ceph ()[#2](https://gitlab.funkypenguin.co.nz/funkypenguin/geeks-cookbook/issues/2))

|

||||

2. Correct the fact that volumes don't automount on boot ([#3](https://gitlab.funkypenguin.co.nz/funkypenguin/geeks-cookbook/issues/3))

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

--8<-- "recipe-footer.md"

|

||||

|

||||

@@ -29,11 +29,11 @@ Under normal OIDC auth, you have to tell your auth provider which URLs it may re

|

||||

|

||||

[@thomaseddon's traefik-forward-auth](https://github.com/thomseddon/traefik-forward-auth) includes an ingenious mechanism to simulate an "_auth host_" in your OIDC authentication, so that you can protect an unlimited amount of DNS names (_with a common domain suffix_), without having to manually maintain a list.

|

||||

|

||||

#### How does it work?

|

||||

### How does it work?

|

||||

|

||||

Say you're protecting **radarr.example.com**. When you first browse to **https://radarr.example.com**, Traefik forwards your session to traefik-forward-auth, to be authenticated. Traefik-forward-auth redirects you to your OIDC provider's login (_KeyCloak, in this case_), but instructs the OIDC provider to redirect a successfully authenticated session **back** to **https://auth.example.com/_oauth**, rather than to **https://radarr.example.com/_oauth**.

|

||||

Say you're protecting **radarr.example.com**. When you first browse to **<https://radarr.example.com>**, Traefik forwards your session to traefik-forward-auth, to be authenticated. Traefik-forward-auth redirects you to your OIDC provider's login (_KeyCloak, in this case_), but instructs the OIDC provider to redirect a successfully authenticated session **back** to **<https://auth.example.com/_oauth>**, rather than to **<https://radarr.example.com/_oauth>**.

|

||||

|

||||

When you successfully authenticate against the OIDC provider, you are redirected to the "_redirect_uri_" of https://auth.example.com. Again, your request hits Traefik, which forwards the session to traefik-forward-auth, which **knows** that you've just been authenticated (_cookies have a role to play here_). Traefik-forward-auth also knows the URL of your **original** request (_thanks to the X-Forwarded-Whatever header_). Traefik-forward-auth redirects you to your original destination, and everybody is happy.

|

||||

When you successfully authenticate against the OIDC provider, you are redirected to the "_redirect_uri_" of <https://auth.example.com>. Again, your request hits Traefik, which forwards the session to traefik-forward-auth, which **knows** that you've just been authenticated (_cookies have a role to play here_). Traefik-forward-auth also knows the URL of your **original** request (_thanks to the X-Forwarded-Whatever header_). Traefik-forward-auth redirects you to your original destination, and everybody is happy.

|

||||

|

||||

This clever workaround only works under 2 conditions:

|

||||

|

||||

@@ -50,4 +50,4 @@ Traefik Forward Auth needs to authenticate an incoming user against a provider.

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

|

||||

[^1]: Authhost mode is specifically handy for Google authentication, since Google doesn't permit wildcard redirect_uris, like [KeyCloak][keycloak] does.

|

||||

[^1]: Authhost mode is specifically handy for Google authentication, since Google doesn't permit wildcard redirect_uris, like [KeyCloak][keycloak] does.

|

||||

|

||||

@@ -49,7 +49,7 @@ staticPasswords:

|

||||

|

||||

Create `/var/data/config/traefik-forward-auth/traefik-forward-auth.env` as follows:

|

||||

|

||||

```

|

||||

```bash

|

||||

DEFAULT_PROVIDER: oidc

|

||||

PROVIDERS_OIDC_CLIENT_ID: foo # This is the staticClients.id value in config.yml above

|

||||

PROVIDERS_OIDC_CLIENT_SECRET: bar # This is the staticClients.secret value in config.yml above

|

||||

@@ -176,7 +176,7 @@ Once you redeploy traefik-forward-auth with the above, it **should** use dex as

|

||||

|

||||

### Test

|

||||

|

||||

Browse to https://whoami.example.com (_obviously, customized for your domain and having created a DNS record_), and all going according to plan, you'll be redirected to a CoreOS Dex login. Once successfully logged in, you'll be directed to the basic whoami page :thumbsup:

|

||||

Browse to <https://whoami.example.com> (_obviously, customized for your domain and having created a DNS record_), and all going according to plan, you'll be redirected to a CoreOS Dex login. Once successfully logged in, you'll be directed to the basic whoami page :thumbsup:

|

||||

|

||||

### Protect services

|

||||

|

||||

|

||||

@@ -12,9 +12,9 @@ This recipe will illustrate how to point Traefik Forward Auth to Google, confirm

|

||||

|

||||

#### TL;DR

|

||||

|

||||

Log into https://console.developers.google.com/, create a new project then search for and select "**Credentials**" in the search bar.

|

||||

Log into <https://console.developers.google.com/>, create a new project then search for and select "**Credentials**" in the search bar.

|

||||

|

||||

Fill out the "OAuth Consent Screen" tab, and then click, "**Create Credentials**" > "**OAuth client ID**". Select "**Web Application**", fill in the name of your app, skip "**Authorized JavaScript origins**" and fill "**Authorized redirect URIs**" with either all the domains you will allow authentication from, appended with the url-path (*e.g. https://radarr.example.com/_oauth, https://radarr.example.com/_oauth, etc*), or if you don't like frustration, use a "auth host" URL instead, like "*https://auth.example.com/_oauth*" (*see below for details*)

|

||||

Fill out the "OAuth Consent Screen" tab, and then click, "**Create Credentials**" > "**OAuth client ID**". Select "**Web Application**", fill in the name of your app, skip "**Authorized JavaScript origins**" and fill "**Authorized redirect URIs**" with either all the domains you will allow authentication from, appended with the url-path (*e.g. <https://radarr.example.com/_oauth>, <https://radarr.example.com/_oauth>, etc*), or if you don't like frustration, use a "auth host" URL instead, like "*<https://auth.example.com/_oauth>*" (*see below for details*)

|

||||

|

||||

#### Monkey see, monkey do 🙈

|

||||

|

||||

@@ -27,7 +27,7 @@ Here's a [screencast I recorded](https://static.funkypenguin.co.nz/2021/screenca

|

||||

|

||||

Create `/var/data/config/traefik-forward-auth/traefik-forward-auth.env` as follows:

|

||||

|

||||

```

|

||||

```bash

|

||||

PROVIDERS_GOOGLE_CLIENT_ID=<your client id>

|

||||

PROVIDERS_GOOGLE_CLIENT_SECRET=<your client secret>

|

||||

SECRET=<a random string, make it up>

|

||||

@@ -41,7 +41,7 @@ WHITELIST=you@yourdomain.com, me@mydomain.com

|

||||

|

||||

Create `/var/data/config/traefik-forward-auth/traefik-forward-auth.yml` as follows:

|

||||

|

||||

```

|

||||

```yaml

|

||||

traefik-forward-auth:

|

||||

image: thomseddon/traefik-forward-auth:2.1.0

|

||||

env_file: /var/data/config/traefik-forward-auth/traefik-forward-auth.env

|

||||

@@ -77,7 +77,7 @@ Create `/var/data/config/traefik-forward-auth/traefik-forward-auth.yml` as follo

|

||||

|

||||

If you're not confident that forward authentication is working, add a simple "whoami" test container to the above .yml, to help debug traefik forward auth, before attempting to add it to a more complex container.

|

||||

|

||||

```

|

||||

```yaml

|

||||

# This simply validates that traefik forward authentication is working

|

||||

whoami:

|

||||

image: containous/whoami

|

||||

@@ -114,7 +114,7 @@ Deploy traefik-forward-auth with ```docker stack deploy traefik-forward-auth -c

|

||||

|

||||

### Test

|

||||

|

||||

Browse to https://whoami.example.com (*obviously, customized for your domain and having created a DNS record*), and all going according to plan, you should be redirected to a Google login. Once successfully logged in, you'll be directed to the basic whoami page.

|

||||

Browse to <https://whoami.example.com> (*obviously, customized for your domain and having created a DNS record*), and all going according to plan, you should be redirected to a Google login. Once successfully logged in, you'll be directed to the basic whoami page.

|

||||

|

||||

## Summary

|

||||

|

||||

@@ -127,4 +127,4 @@ What have we achieved? By adding an additional three simple labels to any servic

|

||||

|

||||

[^1]: Be sure to populate `WHITELIST` in `traefik-forward-auth.env`, else you'll happily be granting **any** authenticated Google account access to your services!

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

--8<-- "recipe-footer.md"

|

||||

|

||||

@@ -10,7 +10,7 @@ While the [Traefik Forward Auth](/ha-docker-swarm/traefik-forward-auth/) recipe

|

||||

|

||||

Create `/var/data/config/traefik/traefik-forward-auth.env` as follows (_change "master" if you created a different realm_):

|

||||

|

||||

```

|

||||

```bash

|

||||

CLIENT_ID=<your keycloak client name>

|

||||

CLIENT_SECRET=<your keycloak client secret>

|

||||

OIDC_ISSUER=https://<your keycloak URL>/auth/realms/master

|

||||

@@ -23,8 +23,8 @@ COOKIE_DOMAIN=<the root FQDN of your domain>

|

||||

|

||||

This is a small container, you can simply add the following content to the existing `traefik-app.yml` deployed in the previous [Traefik](/ha-docker-swarm/traefik/) recipe:

|

||||

|

||||

```

|

||||

traefik-forward-auth:

|

||||

```bash

|

||||

traefik-forward-auth:

|

||||

image: funkypenguin/traefik-forward-auth

|

||||

env_file: /var/data/config/traefik/traefik-forward-auth.env

|

||||

networks:

|

||||

@@ -39,8 +39,8 @@ This is a small container, you can simply add the following content to the exist

|

||||

|

||||

If you're not confident that forward authentication is working, add a simple "whoami" test container, to help debug traefik forward auth, before attempting to add it to a more complex container.

|

||||

|

||||

```

|

||||

# This simply validates that traefik forward authentication is working

|

||||

```bash

|

||||

# This simply validates that traefik forward authentication is working

|

||||

whoami:

|

||||

image: containous/whoami

|

||||

networks:

|

||||

@@ -64,13 +64,13 @@ Redeploy traefik with `docker stack deploy traefik-app -c /var/data/traefik/trae

|

||||

|

||||

### Test

|

||||

|

||||

Browse to https://whoami.example.com (_obviously, customized for your domain and having created a DNS record_), and all going according to plan, you'll be redirected to a KeyCloak login. Once successfully logged in, you'll be directed to the basic whoami page.

|

||||

Browse to <https://whoami.example.com> (_obviously, customized for your domain and having created a DNS record_), and all going according to plan, you'll be redirected to a KeyCloak login. Once successfully logged in, you'll be directed to the basic whoami page.

|

||||

|

||||

### Protect services

|

||||

|

||||

To protect any other service, ensure the service itself is exposed by Traefik (_if you were previously using an oauth_proxy for this, you may have to migrate some labels from the oauth_proxy serivce to the service itself_). Add the following 3 labels:

|

||||

|

||||

```

|

||||

```yaml

|

||||

- traefik.frontend.auth.forward.address=http://traefik-forward-auth:4181

|

||||

- traefik.frontend.auth.forward.authResponseHeaders=X-Forwarded-User

|

||||

- traefik.frontend.auth.forward.trustForwardHeader=true

|

||||

@@ -89,4 +89,4 @@ What have we achieved? By adding an additional three simple labels to any servic

|

||||

|

||||

[^1]: KeyCloak is very powerful. You can add 2FA and all other clever things outside of the scope of this simple recipe ;)

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

--8<-- "recipe-footer.md"

|

||||

|

||||

@@ -36,7 +36,7 @@ While it's possible to configure traefik via docker command arguments, I prefer

|

||||

|

||||

Create `/var/data/traefikv2/traefik.toml` as follows:

|

||||

|

||||

```

|

||||

```bash

|

||||

[global]

|

||||

checkNewVersion = true

|

||||

|

||||

@@ -87,7 +87,7 @@ Create `/var/data/traefikv2/traefik.toml` as follows:

|

||||

|

||||

Create `/var/data/config/traefik/traefik.yml` as follows:

|

||||

|

||||

```

|

||||

```yaml

|

||||

version: "3.2"

|

||||

|

||||

# What is this?

|

||||

@@ -116,7 +116,7 @@ networks:

|

||||

|

||||

Create `/var/data/config/traefikv2/traefikv2.env` with the environment variables required by the provider you chose in the LetsEncrypt DNS Challenge section of `traefik.toml`. Full configuration options can be found in the [Traefik documentation](https://doc.traefik.io/traefik/https/acme/#providers). Route53 and CloudFlare examples are below.

|

||||

|

||||

```

|

||||

```bash

|

||||

# Route53 example

|

||||

AWS_ACCESS_KEY_ID=<your-aws-key>

|

||||

AWS_SECRET_ACCESS_KEY=<your-aws-secret>

|

||||

@@ -185,7 +185,7 @@ networks:

|

||||

|

||||

Docker won't start a service with a bind-mount to a non-existent file, so prepare an empty acme.json and traefik.log (_with the appropriate permissions_) by running:

|

||||

|

||||

```

|

||||

```bash

|

||||

touch /var/data/traefikv2/acme.json

|

||||

touch /var/data/traefikv2/traefik.log

|

||||

chmod 600 /var/data/traefikv2/acme.json

|

||||

@@ -205,7 +205,7 @@ Likewise with the log file.

|

||||

|

||||

First, launch the traefik stack, which will do nothing other than create an overlay network by running `docker stack deploy traefik -c /var/data/config/traefik/traefik.yml`

|

||||

|

||||

```

|

||||

```bash

|

||||

[root@kvm ~]# docker stack deploy traefik -c /var/data/config/traefik/traefik.yml

|

||||

Creating network traefik_public

|

||||

Creating service traefik_scratch

|

||||

@@ -214,7 +214,7 @@ Creating service traefik_scratch

|

||||

|

||||

Now deploy the traefik application itself (*which will attach to the overlay network*) by running `docker stack deploy traefikv2 -c /var/data/config/traefikv2/traefikv2.yml`

|

||||

|

||||

```

|

||||

```bash

|

||||

[root@kvm ~]# docker stack deploy traefikv2 -c /var/data/config/traefikv2/traefikv2.yml

|

||||

Creating service traefikv2_traefikv2

|

||||

[root@kvm ~]#

|

||||

@@ -222,7 +222,7 @@ Creating service traefikv2_traefikv2

|

||||

|

||||

Confirm traefik is running with `docker stack ps traefikv2`:

|

||||

|

||||

```

|

||||

```bash

|

||||

root@raphael:~# docker stack ps traefikv2

|

||||

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

|

||||

lmvqcfhap08o traefikv2_app.dz178s1aahv16bapzqcnzc03p traefik:v2.4 donatello Running Running 2 minutes ago *:443->443/tcp,*:80->80/tcp

|

||||

@@ -231,11 +231,11 @@ root@raphael:~#

|

||||

|

||||

### Check Traefik Dashboard

|

||||

|

||||

You should now be able to access[^1] your traefik instance on **https://traefik.<your domain\>** (*if your LetsEncrypt certificate is working*), or **http://<node IP\>:8080** (*if it's not*)- It'll look a little lonely currently (*below*), but we'll populate it as we add recipes :grin:

|

||||

You should now be able to access[^1] your traefik instance on `https://traefik.<your domain\>` (*if your LetsEncrypt certificate is working*), or `http://<node IP\>:8080` (*if it's not*)- It'll look a little lonely currently (*below*), but we'll populate it as we add recipes :grin:

|

||||

|

||||

|

||||

|

||||

### Summary

|

||||

### Summary

|

||||

|

||||

!!! summary

|

||||

We've achieved:

|

||||

@@ -246,4 +246,4 @@ You should now be able to access[^1] your traefik instance on **https://traefik.

|

||||

|

||||

[^1]: Did you notice how no authentication was required to view the Traefik dashboard? Eek! We'll tackle that in the next section, regarding [Traefik Forward Authentication](/ha-docker-swarm/traefik-forward-auth/)!

|

||||

|

||||

--8<-- "recipe-footer.md"

|

||||

--8<-- "recipe-footer.md"

|

||||

|

||||

Binary file not shown.

|

Before Width: | Height: | Size: 128 KiB |

Binary file not shown.

|

Before Width: | Height: | Size: 310 KiB |

@@ -8,7 +8,7 @@ hide:

|

||||

|

||||

## What is this?

|

||||

|

||||

Funky Penguin's "**[Geek Cookbook](https://geek-cookbook.funkypenguin.co.nz)**" is a collection of how-to guides for establishing your own container-based self-hosting platform, using either [Docker Swarm](/ha-docker-swarm/design/) or [Kubernetes](/kubernetes/).

|

||||

Funky Penguin's "**[Geek Cookbook](https://geek-cookbook.funkypenguin.co.nz)**" is a collection of how-to guides for establishing your own container-based self-hosting platform, using either [Docker Swarm](/ha-docker-swarm/design/) or [Kubernetes](/kubernetes/).

|

||||

|

||||

[Dive into Docker Swarm](/ha-docker-swarm/design/){: .md-button .md-button--primary}

|

||||

[Kick it with Kubernetes](/kubernetes/){: .md-button}

|

||||

@@ -44,7 +44,6 @@ So if you're familiar enough with the concepts above, and you've done self-hosti

|

||||

|

||||

:wave: Hi, I'm [David](https://www.funkypenguin.co.nz/about/)

|

||||

|

||||

|

||||

## What have you done for me lately? (CHANGELOG)

|

||||

|

||||

Check out recent change at [CHANGELOG](/CHANGELOG/)

|

||||